openai

Module openai

API

Definitions

ballerinax/openai Ballerina library

Overview

OpenAI provides a suite of powerful AI models and services for natural language processing, code generation, image understanding, and more.

The ballerinax/openai package offers APIs to easily connect and interact with OpenAI's RESTful API endpoints, enabling seamless integration with models such as GPT, Whisper, and DALL·E.

Setup guide

To use the OpenAI connector, you must have access to the OpenAI API through an OpenAI account and API key.

If you do not have an OpenAI account, you can sign up for one here.

Step 1: Create/Open an OpenAI account

- Visit the OpenAI Platform.

- Sign in with your existing credentials, or create a new OpenAI account if you don’t already have one.

Step 2: Create a project

-

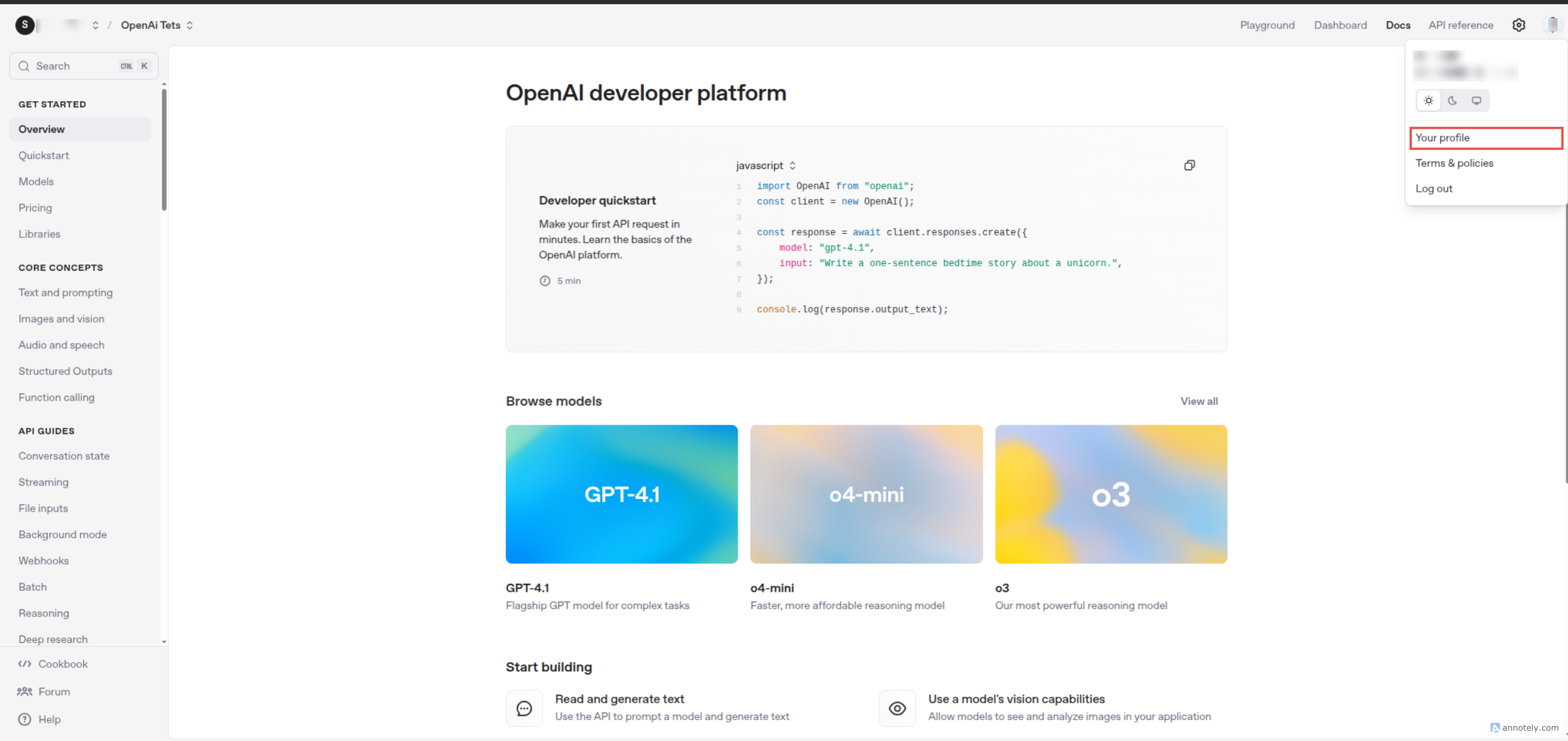

Once logged in, click on your profile icon in the top-right corner.

-

In the dropdown menu, click "Your Profile".

-

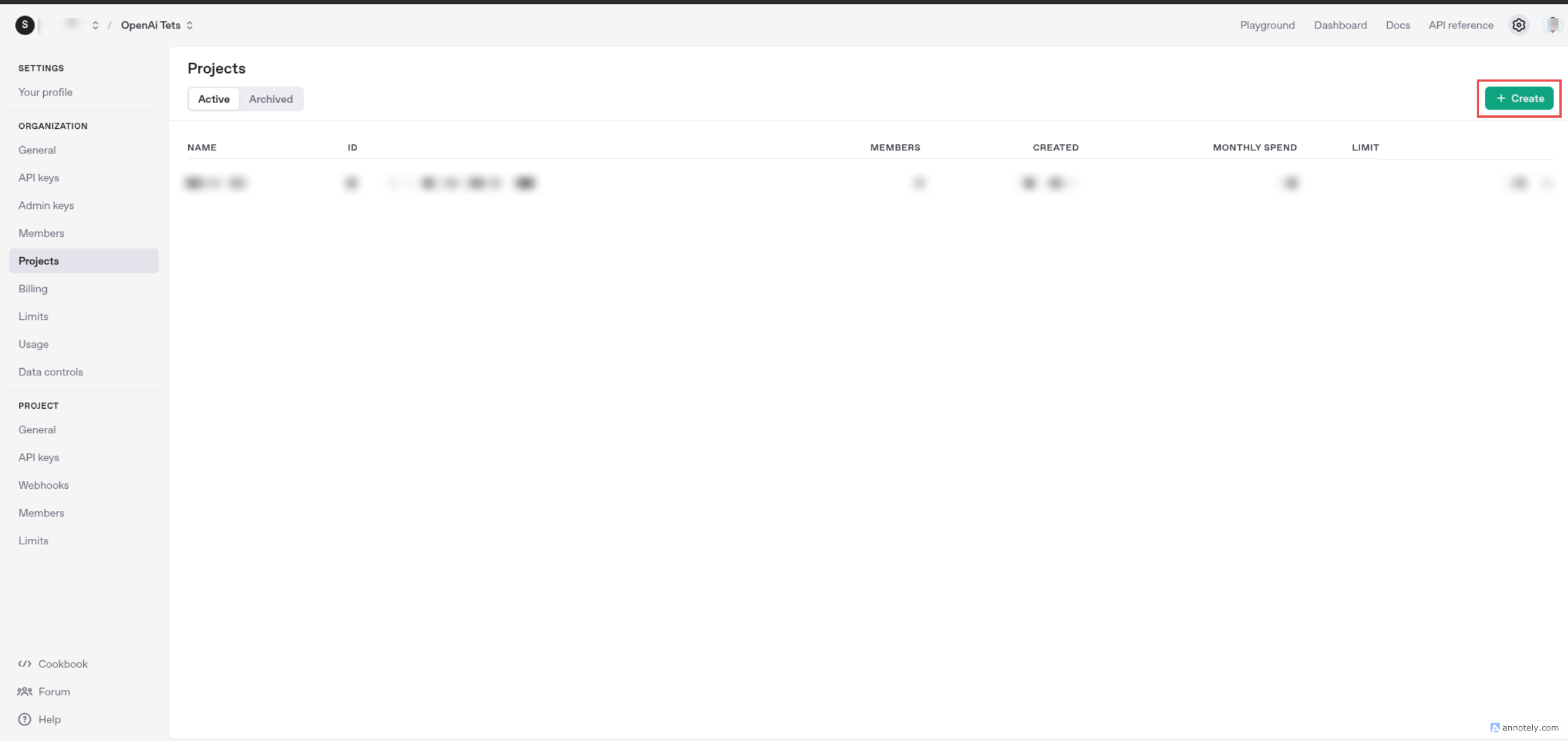

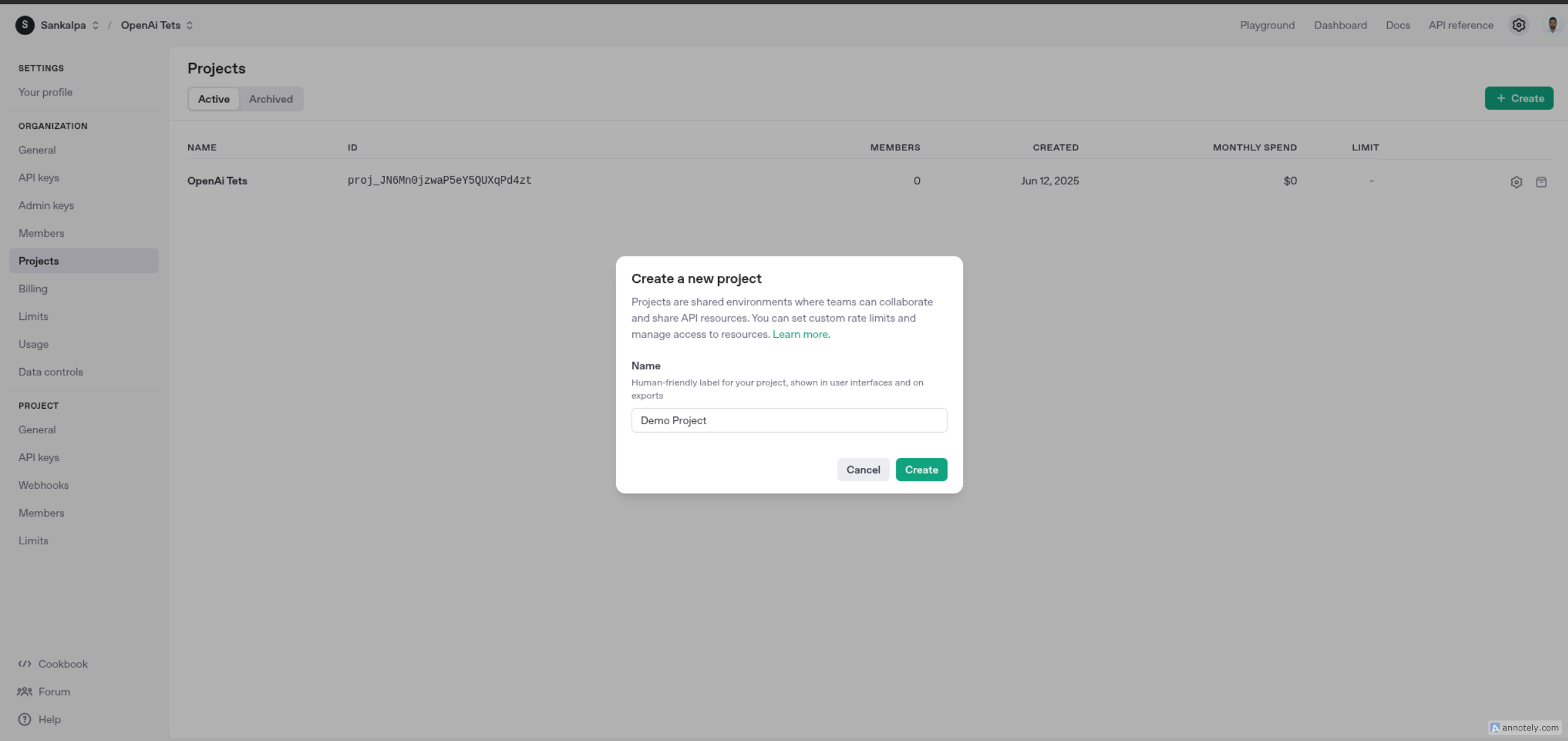

Then navigate to the "Projects" section from the sidebar to create a new project.

-

Click the "Create Project" button to create a new project.

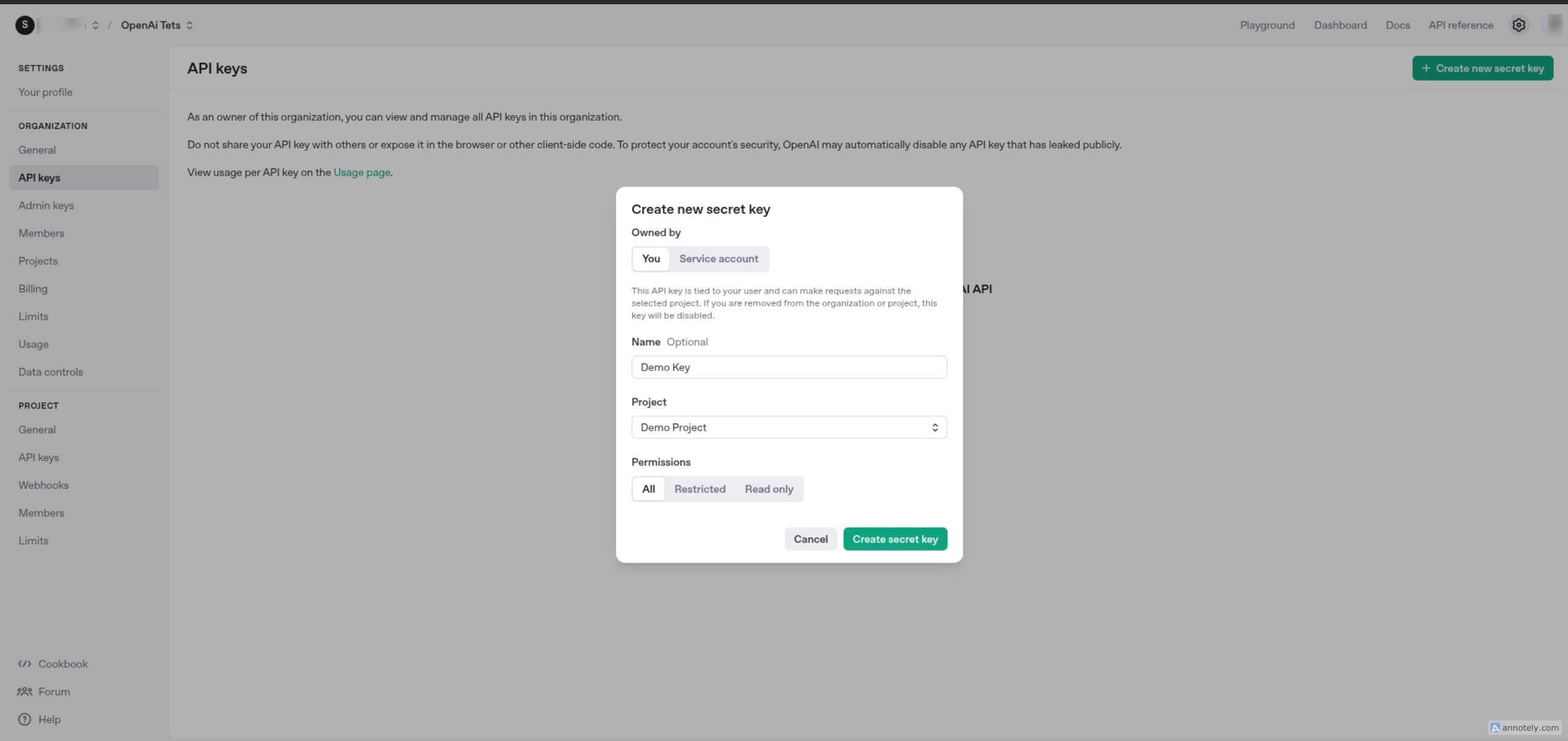

Step 3: Navigate to API Keys

-

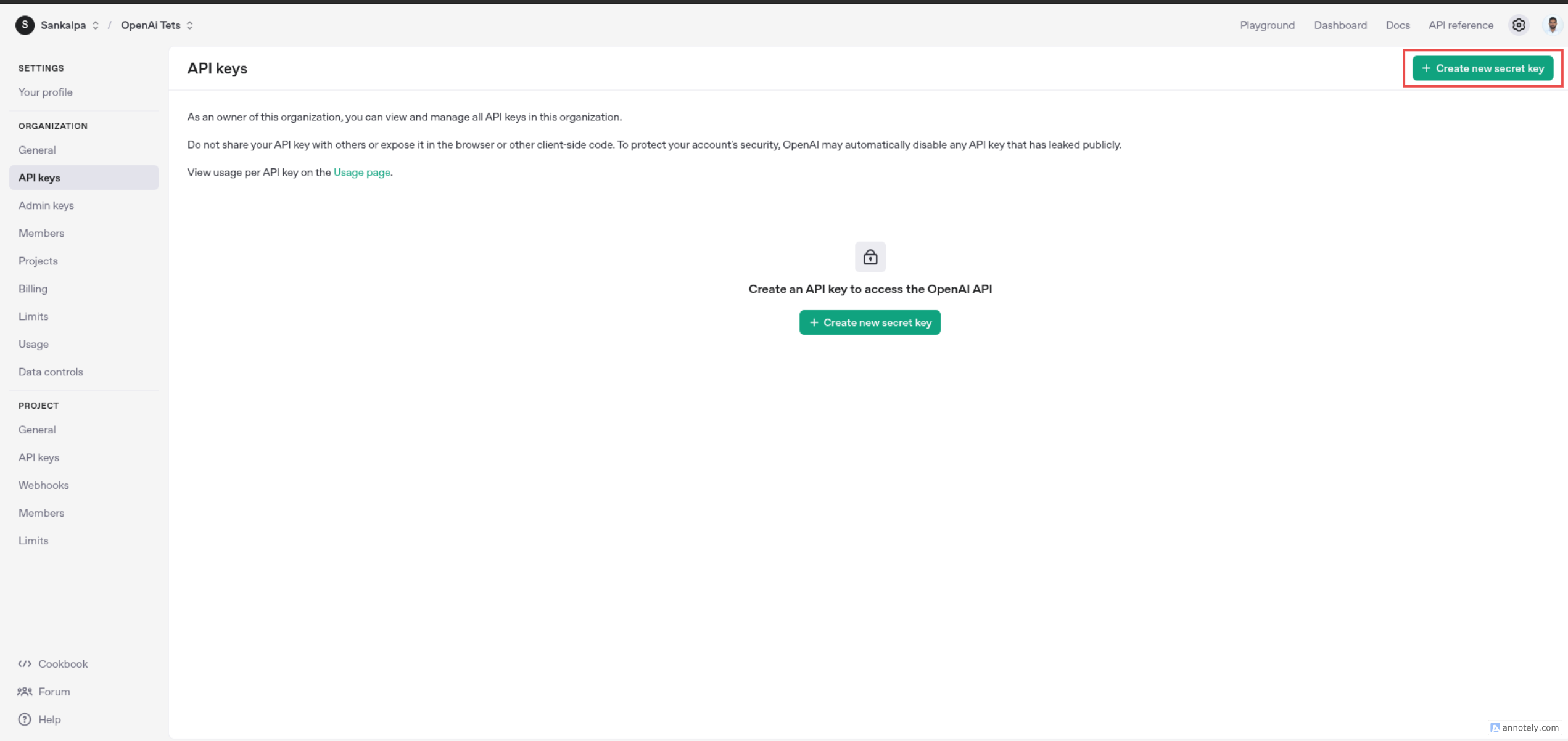

Navigate to the "API Keys" section from the sidebar and click the “+ Create new secret key” button. to create a new API Key.

-

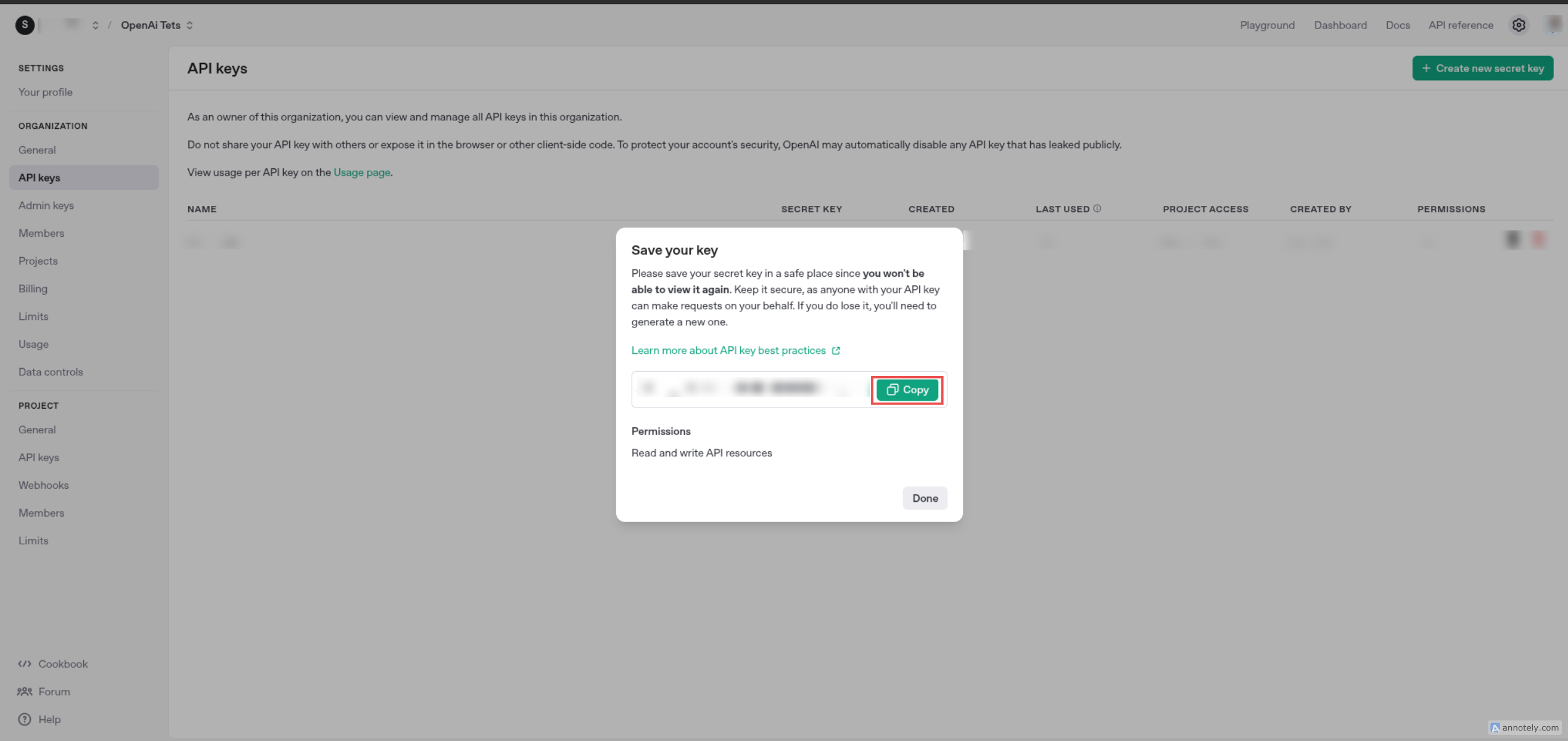

Provide a name for the key (e.g., "Connector Key") and select Project name and then confirm.

-

Copy the generated API key and store it securely. ( Note: You will not be able to view it again later.)

Quickstart

To use the OpenAI connector in your Ballerina application, update the .bal file as follows:

Step 1: Import the module

Import the openai module.

import ballerinax/openai;

Step 2: Instantiate a new connector

-

Create a

Config.tomlfile and, configure the obtained credentials in the above steps as follows:token = "<Access Token>" -

Create a

openai:ConnectionConfigwith the obtained access token and initialize the connector with it.configurable string token = ?; final openai:Client openai = check new({ auth: { token } });

Step 3: Invoke the connector operation

Now, utilize the available connector operations.

Create an Assistant

public function main() returns error? { openai:CreateAssistantRequest request = { model: "gpt-4o", name: "Math Tutor", description: null, instructions: "You are a personal math tutor.", tools: [{"type": "code_interpreter"}], toolResources: {"code_interpreter": {"file_ids": []}}, metadata: {}, topP: 1.0, temperature: 1.0, responseFormat: {"type": "text"} }; //Note: This header is required because the Assistants API is currently in beta, and OpenAI requires explicit opt-in. configurable map<string> headers = { "OpenAI-Beta": "assistants=v2" }; openai:AssistantObject response = check openai->/assistants.post(request, headers = headers); }

Step 4: Run the Ballerina application

bal run

Examples

The ballerinax/openai connector provides practical examples illustrating usage in various scenarios. Explore these examples, covering the following use cases:

- Financial Assistant - Build a Personal Finance Assistant that helps users manage their budget, track expenses, and get financial advice.

- Marketing Image Generator - Creates an assistant that takes a user’s description from the console, makes a DALL·E image with it.

Clients

openai: Client

The OpenAI REST API. Please see https://platform.openai.com/docs/api-reference for more details.

Constructor

Gets invoked to initialize the connector.

init (ConnectionConfig config, string serviceUrl)- config ConnectionConfig - The configurations to be used when initializing the

connector

- serviceUrl string "https://api.openai.com/v1" - URL of the target service

get assistants

function get assistants(map<string|string[]> headers, *ListAssistantsQueries queries) returns ListAssistantsResponse|errorReturns a list of assistants.

Parameters

- queries *ListAssistantsQueries - Queries to be sent with the request

Return Type

post assistants

function post assistants(CreateAssistantRequest payload, map<string|string[]> headers) returns AssistantObject|errorCreate an assistant with a model and instructions.

Parameters

- payload CreateAssistantRequest -

Return Type

- AssistantObject|error - OK

get assistants/[string assistantId]

function get assistants/[string assistantId](map<string|string[]> headers) returns AssistantObject|errorRetrieves an assistant.

Return Type

- AssistantObject|error - OK

post assistants/[string assistantId]

function post assistants/[string assistantId](ModifyAssistantRequest payload, map<string|string[]> headers) returns AssistantObject|errorModifies an assistant.

Parameters

- payload ModifyAssistantRequest -

Return Type

- AssistantObject|error - OK

delete assistants/[string assistantId]

function delete assistants/[string assistantId](map<string|string[]> headers) returns DeleteAssistantResponse|errorDelete an assistant.

Return Type

post audio/speech

function post audio/speech(CreateSpeechRequest payload, map<string|string[]> headers) returns byte[]|errorGenerates audio from the input text.

Parameters

- payload CreateSpeechRequest -

Return Type

- byte[]|error - OK

post audio/transcriptions

function post audio/transcriptions(CreateTranscriptionRequest payload, map<string|string[]> headers) returns InlineResponse200|errorTranscribes audio into the input language.

Parameters

- payload CreateTranscriptionRequest -

Return Type

- InlineResponse200|error - OK

post audio/translations

function post audio/translations(CreateTranslationRequest payload, map<string|string[]> headers) returns InlineResponse2001|errorTranslates audio into English.

Parameters

- payload CreateTranslationRequest -

Return Type

- InlineResponse2001|error - OK

get batches

function get batches(map<string|string[]> headers, *ListBatchesQueries queries) returns ListBatchesResponse|errorList your organization's batches.

Parameters

- queries *ListBatchesQueries - Queries to be sent with the request

Return Type

- ListBatchesResponse|error - Batch listed successfully

post batches

Creates and executes a batch from an uploaded file of requests

Parameters

- payload BatchesBody -

get batches/[string batchId]

Retrieves a batch.

post batches/[string batchId]/cancel

Cancels an in-progress batch. The batch will be in status cancelling for up to 10 minutes, before changing to cancelled, where it will have partial results (if any) available in the output file.

get chat/completions

function get chat/completions(map<string|string[]> headers, *ListChatCompletionsQueries queries) returns ChatCompletionList|errorList stored Chat Completions. Only Chat Completions that have been stored

with the store parameter set to true will be returned.

Parameters

- queries *ListChatCompletionsQueries - Queries to be sent with the request

Return Type

- ChatCompletionList|error - A list of Chat Completions

post chat/completions

function post chat/completions(CreateChatCompletionRequest payload, map<string|string[]> headers) returns CreateChatCompletionResponse|errorStarting a new project? We recommend trying Responses to take advantage of the latest OpenAI platform features. Compare Chat Completions with Responses.

Creates a model response for the given chat conversation. Learn more in the text generation, vision, and audio guides.

Parameter support can differ depending on the model used to generate the response, particularly for newer reasoning models. Parameters that are only supported for reasoning models are noted below. For the current state of unsupported parameters in reasoning models, refer to the reasoning guide.

Parameters

- payload CreateChatCompletionRequest -

Return Type

get chat/completions/[string completionId]

function get chat/completions/[string completionId](map<string|string[]> headers) returns CreateChatCompletionResponse|errorGet a stored chat completion. Only Chat Completions that have been created

with the store parameter set to true will be returned.

Return Type

- CreateChatCompletionResponse|error - A chat completion

post chat/completions/[string completionId]

function post chat/completions/[string completionId](CompletionscompletionIdBody payload, map<string|string[]> headers) returns CreateChatCompletionResponse|errorModify a stored chat completion. Only Chat Completions that have been

created with the store parameter set to true can be modified. Currently,

the only supported modification is to update the metadata field.

Parameters

- payload CompletionscompletionIdBody -

Return Type

- CreateChatCompletionResponse|error - A chat completion

delete chat/completions/[string completionId]

function delete chat/completions/[string completionId](map<string|string[]> headers) returns ChatCompletionDeleted|errorDelete a stored chat completion. Only Chat Completions that have been

created with the store parameter set to true can be deleted.

Return Type

- ChatCompletionDeleted|error - The chat completion was deleted successfully

get chat/completions/[string completionId]/messages

function get chat/completions/[string completionId]/messages(map<string|string[]> headers, *GetChatCompletionMessagesQueries queries) returns ChatCompletionMessageList|errorGet the messages in a stored chat completion. Only Chat Completions that

have been created with the store parameter set to true will be

returned.

Parameters

- queries *GetChatCompletionMessagesQueries - Queries to be sent with the request

Return Type

- ChatCompletionMessageList|error - A list of messages

post completions

function post completions(CreateCompletionRequest payload, map<string|string[]> headers) returns CreateCompletionResponse|errorCreates a completion for the provided prompt and parameters.

Parameters

- payload CreateCompletionRequest -

Return Type

post embeddings

function post embeddings(CreateEmbeddingRequest payload, map<string|string[]> headers) returns CreateEmbeddingResponse|errorCreates an embedding vector representing the input text.

Parameters

- payload CreateEmbeddingRequest -

Return Type

get evals

List evaluations for a project.

Parameters

- queries *ListEvalsQueries - Queries to be sent with the request

post evals

Create the structure of an evaluation that can be used to test a model's performance. An evaluation is a set of testing criteria and a datasource. After creating an evaluation, you can run it on different models and model parameters. We support several types of graders and datasources. For more information, see the Evals guide.

Parameters

- payload CreateEvalRequest -

get evals/[string evalId]

Get an evaluation by ID.

post evals/[string evalId]

function post evals/[string evalId](EvalsevalIdBody payload, map<string|string[]> headers) returns Eval|errorUpdate certain properties of an evaluation.

Parameters

- payload EvalsevalIdBody - Request to update an evaluation

delete evals/[string evalId]

function delete evals/[string evalId](map<string|string[]> headers) returns InlineResponse2002|errorDelete an evaluation.

Return Type

- InlineResponse2002|error - Successfully deleted the evaluation

get evals/[string evalId]/runs

function get evals/[string evalId]/runs(map<string|string[]> headers, *GetEvalRunsQueries queries) returns EvalRunList|errorGet a list of runs for an evaluation.

Parameters

- queries *GetEvalRunsQueries - Queries to be sent with the request

Return Type

- EvalRunList|error - A list of runs for the evaluation

post evals/[string evalId]/runs

function post evals/[string evalId]/runs(CreateEvalRunRequest payload, map<string|string[]> headers) returns EvalRun|errorCreate a new evaluation run. This is the endpoint that will kick off grading.

Parameters

- payload CreateEvalRunRequest -

get evals/[string evalId]/runs/[string runId]

function get evals/[string evalId]/runs/[string runId](map<string|string[]> headers) returns EvalRun|errorGet an evaluation run by ID.

post evals/[string evalId]/runs/[string runId]

function post evals/[string evalId]/runs/[string runId](map<string|string[]> headers) returns EvalRun|errorCancel an ongoing evaluation run.

delete evals/[string evalId]/runs/[string runId]

function delete evals/[string evalId]/runs/[string runId](map<string|string[]> headers) returns InlineResponse2003|errorDelete an eval run.

Return Type

- InlineResponse2003|error - Successfully deleted the eval run

get evals/[string evalId]/runs/[string runId]/output_items

function get evals/[string evalId]/runs/[string runId]/output_items(map<string|string[]> headers, *GetEvalRunOutputItemsQueries queries) returns EvalRunOutputItemList|errorGet a list of output items for an evaluation run.

Parameters

- queries *GetEvalRunOutputItemsQueries - Queries to be sent with the request

Return Type

- EvalRunOutputItemList|error - A list of output items for the evaluation run

get evals/[string evalId]/runs/[string runId]/output_items/[string outputItemId]

function get evals/[string evalId]/runs/[string runId]/output_items/[string outputItemId](map<string|string[]> headers) returns EvalRunOutputItem|errorGet an evaluation run output item by ID.

Return Type

- EvalRunOutputItem|error - The evaluation run output item

get files

function get files(map<string|string[]> headers, *ListFilesQueries queries) returns ListFilesResponse|errorReturns a list of files.

Parameters

- queries *ListFilesQueries - Queries to be sent with the request

Return Type

- ListFilesResponse|error - OK

post files

function post files(CreateFileRequest payload, map<string|string[]> headers) returns OpenAIFile|errorUpload a file that can be used across various endpoints. Individual files can be up to 512 MB, and the size of all files uploaded by one organization can be up to 100 GB.

The Assistants API supports files up to 2 million tokens and of specific file types. See the Assistants Tools guide for details.

The Fine-tuning API only supports .jsonl files. The input also has certain required formats for fine-tuning chat or completions models.

The Batch API only supports .jsonl files up to 200 MB in size. The input also has a specific required format.

Please contact us if you need to increase these storage limits.

Parameters

- payload CreateFileRequest -

Return Type

- OpenAIFile|error - OK

get files/[string fileId]

function get files/[string fileId](map<string|string[]> headers) returns OpenAIFile|errorReturns information about a specific file.

Return Type

- OpenAIFile|error - OK

delete files/[string fileId]

function delete files/[string fileId](map<string|string[]> headers) returns DeleteFileResponse|errorDelete a file.

Return Type

- DeleteFileResponse|error - OK

get files/[string fileId]/content

Returns the contents of the specified file.

get fine_tuning/checkpoints/[string fineTunedModelCheckpoint]/permissions

function get fine_tuning/checkpoints/[string fineTunedModelCheckpoint]/permissions(map<string|string[]> headers, *ListFineTuningCheckpointPermissionsQueries queries) returns ListFineTuningCheckpointPermissionResponse|errorNOTE: This endpoint requires an admin API key.

Organization owners can use this endpoint to view all permissions for a fine-tuned model checkpoint.

Parameters

- queries *ListFineTuningCheckpointPermissionsQueries - Queries to be sent with the request

Return Type

post fine_tuning/checkpoints/[string fineTunedModelCheckpoint]/permissions

function post fine_tuning/checkpoints/[string fineTunedModelCheckpoint]/permissions(CreateFineTuningCheckpointPermissionRequest payload, map<string|string[]> headers) returns ListFineTuningCheckpointPermissionResponse|errorNOTE: Calling this endpoint requires an admin API key.

This enables organization owners to share fine-tuned models with other projects in their organization.

Parameters

Return Type

delete fine_tuning/checkpoints/[string fineTunedModelCheckpoint]/permissions/[string permissionId]

function delete fine_tuning/checkpoints/[string fineTunedModelCheckpoint]/permissions/[string permissionId](map<string|string[]> headers) returns DeleteFineTuningCheckpointPermissionResponse|errorNOTE: This endpoint requires an admin API key.

Organization owners can use this endpoint to delete a permission for a fine-tuned model checkpoint.

Return Type

get fine_tuning/jobs

function get fine_tuning/jobs(map<string|string[]> headers, *ListPaginatedFineTuningJobsQueries queries) returns ListPaginatedFineTuningJobsResponse|errorList your organization's fine-tuning jobs

Parameters

- queries *ListPaginatedFineTuningJobsQueries - Queries to be sent with the request

Return Type

post fine_tuning/jobs

function post fine_tuning/jobs(CreateFineTuningJobRequest payload, map<string|string[]> headers) returns FineTuningJob|errorCreates a fine-tuning job which begins the process of creating a new model from a given dataset.

Response includes details of the enqueued job including job status and the name of the fine-tuned models once complete.

Parameters

- payload CreateFineTuningJobRequest -

Return Type

- FineTuningJob|error - OK

get fine_tuning/jobs/[string fineTuningJobId]

function get fine_tuning/jobs/[string fineTuningJobId](map<string|string[]> headers) returns FineTuningJob|errorGet info about a fine-tuning job.

Return Type

- FineTuningJob|error - OK

post fine_tuning/jobs/[string fineTuningJobId]/cancel

function post fine_tuning/jobs/[string fineTuningJobId]/cancel(map<string|string[]> headers) returns FineTuningJob|errorImmediately cancel a fine-tune job.

Return Type

- FineTuningJob|error - OK

get fine_tuning/jobs/[string fineTuningJobId]/checkpoints

function get fine_tuning/jobs/[string fineTuningJobId]/checkpoints(map<string|string[]> headers, *ListFineTuningJobCheckpointsQueries queries) returns ListFineTuningJobCheckpointsResponse|errorList checkpoints for a fine-tuning job.

Parameters

- queries *ListFineTuningJobCheckpointsQueries - Queries to be sent with the request

Return Type

get fine_tuning/jobs/[string fineTuningJobId]/events

function get fine_tuning/jobs/[string fineTuningJobId]/events(map<string|string[]> headers, *ListFineTuningEventsQueries queries) returns ListFineTuningJobEventsResponse|errorGet status updates for a fine-tuning job.

Parameters

- queries *ListFineTuningEventsQueries - Queries to be sent with the request

Return Type

post images/edits

function post images/edits(CreateImageEditRequest payload, map<string|string[]> headers) returns ImagesResponse|errorCreates an edited or extended image given one or more source images and a prompt. This endpoint only supports gpt-image-1 and dall-e-2.

Parameters

- payload CreateImageEditRequest -

Return Type

- ImagesResponse|error - OK

post images/generations

function post images/generations(CreateImageRequest payload, map<string|string[]> headers) returns ImagesResponse|errorCreates an image given a prompt. Learn more.

Parameters

- payload CreateImageRequest -

Return Type

- ImagesResponse|error - OK

post images/variations

function post images/variations(CreateImageVariationRequest payload, map<string|string[]> headers) returns ImagesResponse|errorCreates a variation of a given image. This endpoint only supports dall-e-2.

Parameters

- payload CreateImageVariationRequest -

Return Type

- ImagesResponse|error - OK

get models

function get models(map<string|string[]> headers) returns ListModelsResponse|errorLists the currently available models, and provides basic information about each one such as the owner and availability.

Return Type

- ListModelsResponse|error - OK

get models/[string model]

Retrieves a model instance, providing basic information about the model such as the owner and permissioning.

delete models/[string model]

function delete models/[string model](map<string|string[]> headers) returns DeleteModelResponse|errorDelete a fine-tuned model. You must have the Owner role in your organization to delete a model.

Return Type

- DeleteModelResponse|error - OK

post moderations

function post moderations(CreateModerationRequest payload, map<string|string[]> headers) returns CreateModerationResponse|errorClassifies if text and/or image inputs are potentially harmful. Learn more in the moderation guide.

Parameters

- payload CreateModerationRequest -

Return Type

get organization/admin_api_keys

function get organization/admin_api_keys(map<string|string[]> headers, *AdminApiKeysListQueries queries) returns ApiKeyList|errorList organization API keys

Parameters

- queries *AdminApiKeysListQueries - Queries to be sent with the request

Return Type

- ApiKeyList|error - A list of organization API keys

post organization/admin_api_keys

function post organization/admin_api_keys(OrganizationAdminApiKeysBody payload, map<string|string[]> headers) returns AdminApiKey|errorCreate an organization admin API key

Parameters

- payload OrganizationAdminApiKeysBody -

Return Type

- AdminApiKey|error - The newly created admin API key

get organization/admin_api_keys/[string keyId]

function get organization/admin_api_keys/[string keyId](map<string|string[]> headers) returns AdminApiKey|errorRetrieve a single organization API key

Return Type

- AdminApiKey|error - Details of the requested API key

delete organization/admin_api_keys/[string keyId]

function delete organization/admin_api_keys/[string keyId](map<string|string[]> headers) returns InlineResponse2004|errorDelete an organization admin API key

Return Type

- InlineResponse2004|error - Confirmation that the API key was deleted

get organization/audit_logs

function get organization/audit_logs(map<string|string[]> headers, *ListAuditLogsQueries queries) returns ListAuditLogsResponse|errorList user actions and configuration changes within this organization.

Parameters

- queries *ListAuditLogsQueries - Queries to be sent with the request

Return Type

- ListAuditLogsResponse|error - Audit logs listed successfully

get organization/certificates

function get organization/certificates(map<string|string[]> headers, *ListOrganizationCertificatesQueries queries) returns ListCertificatesResponse|errorList uploaded certificates for this organization.

Parameters

- queries *ListOrganizationCertificatesQueries - Queries to be sent with the request

Return Type

- ListCertificatesResponse|error - Certificates listed successfully

post organization/certificates

function post organization/certificates(UploadCertificateRequest payload, map<string|string[]> headers) returns Certificate|errorUpload a certificate to the organization. This does not automatically activate the certificate.

Organizations can upload up to 50 certificates.

Parameters

- payload UploadCertificateRequest - The certificate upload payload

Return Type

- Certificate|error - Certificate uploaded successfully

post organization/certificates/activate

function post organization/certificates/activate(ToggleCertificatesRequest payload, map<string|string[]> headers) returns ListCertificatesResponse|errorActivate certificates at the organization level.

You can atomically and idempotently activate up to 10 certificates at a time.

Parameters

- payload ToggleCertificatesRequest - The certificate activation payload

Return Type

- ListCertificatesResponse|error - Certificates activated successfully

post organization/certificates/deactivate

function post organization/certificates/deactivate(ToggleCertificatesRequest payload, map<string|string[]> headers) returns ListCertificatesResponse|errorDeactivate certificates at the organization level.

You can atomically and idempotently deactivate up to 10 certificates at a time.

Parameters

- payload ToggleCertificatesRequest - The certificate deactivation payload

Return Type

- ListCertificatesResponse|error - Certificates deactivated successfully

get organization/certificates/[string certificateId]

function get organization/certificates/[string certificateId](map<string|string[]> headers, *GetCertificateQueries queries) returns Certificate|errorGet a certificate that has been uploaded to the organization.

You can get a certificate regardless of whether it is active or not.

Parameters

- queries *GetCertificateQueries - Queries to be sent with the request

Return Type

- Certificate|error - Certificate retrieved successfully

post organization/certificates/[string certificateId]

function post organization/certificates/[string certificateId](ModifyCertificateRequest payload, map<string|string[]> headers) returns Certificate|errorModify a certificate. Note that only the name can be modified.

Parameters

- payload ModifyCertificateRequest - The certificate modification payload

Return Type

- Certificate|error - Certificate modified successfully

delete organization/certificates/[string certificateId]

function delete organization/certificates/[string certificateId](map<string|string[]> headers) returns DeleteCertificateResponse|errorDelete a certificate from the organization.

The certificate must be inactive for the organization and all projects.

Return Type

- DeleteCertificateResponse|error - Certificate deleted successfully

get organization/costs

function get organization/costs(map<string|string[]> headers, *UsageCostsQueries queries) returns UsageResponse|errorGet costs details for the organization.

Parameters

- queries *UsageCostsQueries - Queries to be sent with the request

Return Type

- UsageResponse|error - Costs data retrieved successfully

get organization/invites

function get organization/invites(map<string|string[]> headers, *ListInvitesQueries queries) returns InviteListResponse|errorReturns a list of invites in the organization.

Parameters

- queries *ListInvitesQueries - Queries to be sent with the request

Return Type

- InviteListResponse|error - Invites listed successfully

post organization/invites

function post organization/invites(InviteRequest payload, map<string|string[]> headers) returns Invite|errorCreate an invite for a user to the organization. The invite must be accepted by the user before they have access to the organization.

Parameters

- payload InviteRequest - The invite request payload

get organization/invites/[string inviteId]

function get organization/invites/[string inviteId](map<string|string[]> headers) returns Invite|errorRetrieves an invite.

delete organization/invites/[string inviteId]

function delete organization/invites/[string inviteId](map<string|string[]> headers) returns InviteDeleteResponse|errorDelete an invite. If the invite has already been accepted, it cannot be deleted.

Return Type

- InviteDeleteResponse|error - Invite deleted successfully

get organization/projects

function get organization/projects(map<string|string[]> headers, *ListProjectsQueries queries) returns ProjectListResponse|errorReturns a list of projects.

Parameters

- queries *ListProjectsQueries - Queries to be sent with the request

Return Type

- ProjectListResponse|error - Projects listed successfully

post organization/projects

function post organization/projects(ProjectCreateRequest payload, map<string|string[]> headers) returns Project|errorCreate a new project in the organization. Projects can be created and archived, but cannot be deleted.

Parameters

- payload ProjectCreateRequest - The project create request payload

get organization/projects/[string projectId]

function get organization/projects/[string projectId](map<string|string[]> headers) returns Project|errorRetrieves a project.

post organization/projects/[string projectId]

function post organization/projects/[string projectId](ProjectUpdateRequest payload, map<string|string[]> headers) returns Project|errorModifies a project in the organization.

Parameters

- payload ProjectUpdateRequest - The project update request payload

get organization/projects/[string projectId]/api_keys

function get organization/projects/[string projectId]/api_keys(map<string|string[]> headers, *ListProjectApiKeysQueries queries) returns ProjectApiKeyListResponse|errorReturns a list of API keys in the project.

Parameters

- queries *ListProjectApiKeysQueries - Queries to be sent with the request

Return Type

- ProjectApiKeyListResponse|error - Project API keys listed successfully

get organization/projects/[string projectId]/api_keys/[string keyId]

function get organization/projects/[string projectId]/api_keys/[string keyId](map<string|string[]> headers) returns ProjectApiKey|errorRetrieves an API key in the project.

Return Type

- ProjectApiKey|error - Project API key retrieved successfully

delete organization/projects/[string projectId]/api_keys/[string keyId]

function delete organization/projects/[string projectId]/api_keys/[string keyId](map<string|string[]> headers) returns ProjectApiKeyDeleteResponse|errorDeletes an API key from the project.

Return Type

- ProjectApiKeyDeleteResponse|error - Project API key deleted successfully

post organization/projects/[string projectId]/archive

function post organization/projects/[string projectId]/archive(map<string|string[]> headers) returns Project|errorArchives a project in the organization. Archived projects cannot be used or updated.

get organization/projects/[string projectId]/certificates

function get organization/projects/[string projectId]/certificates(map<string|string[]> headers, *ListProjectCertificatesQueries queries) returns ListCertificatesResponse|errorList certificates for this project.

Parameters

- queries *ListProjectCertificatesQueries - Queries to be sent with the request

Return Type

- ListCertificatesResponse|error - Certificates listed successfully

post organization/projects/[string projectId]/certificates/activate

function post organization/projects/[string projectId]/certificates/activate(ToggleCertificatesRequest payload, map<string|string[]> headers) returns ListCertificatesResponse|errorActivate certificates at the project level.

You can atomically and idempotently activate up to 10 certificates at a time.

Parameters

- payload ToggleCertificatesRequest - The certificate activation payload

Return Type

- ListCertificatesResponse|error - Certificates activated successfully

post organization/projects/[string projectId]/certificates/deactivate

function post organization/projects/[string projectId]/certificates/deactivate(ToggleCertificatesRequest payload, map<string|string[]> headers) returns ListCertificatesResponse|errorDeactivate certificates at the project level.

You can atomically and idempotently deactivate up to 10 certificates at a time.

Parameters

- payload ToggleCertificatesRequest - The certificate deactivation payload

Return Type

- ListCertificatesResponse|error - Certificates deactivated successfully

get organization/projects/[string projectId]/rate_limits

function get organization/projects/[string projectId]/rate_limits(map<string|string[]> headers, *ListProjectRateLimitsQueries queries) returns ProjectRateLimitListResponse|errorReturns the rate limits per model for a project.

Parameters

- queries *ListProjectRateLimitsQueries - Queries to be sent with the request

Return Type

- ProjectRateLimitListResponse|error - Project rate limits listed successfully

post organization/projects/[string projectId]/rate_limits/[string rateLimitId]

function post organization/projects/[string projectId]/rate_limits/[string rateLimitId](ProjectRateLimitUpdateRequest payload, map<string|string[]> headers) returns ProjectRateLimit|errorUpdates a project rate limit.

Parameters

- payload ProjectRateLimitUpdateRequest - The project rate limit update request payload

Return Type

- ProjectRateLimit|error - Project rate limit updated successfully

get organization/projects/[string projectId]/service_accounts

function get organization/projects/[string projectId]/service_accounts(map<string|string[]> headers, *ListProjectServiceAccountsQueries queries) returns ProjectServiceAccountListResponse|errorReturns a list of service accounts in the project.

Parameters

- queries *ListProjectServiceAccountsQueries - Queries to be sent with the request

Return Type

- ProjectServiceAccountListResponse|error - Project service accounts listed successfully

post organization/projects/[string projectId]/service_accounts

function post organization/projects/[string projectId]/service_accounts(ProjectServiceAccountCreateRequest payload, map<string|string[]> headers) returns ProjectServiceAccountCreateResponse|errorCreates a new service account in the project. This also returns an unredacted API key for the service account.

Parameters

- payload ProjectServiceAccountCreateRequest - The project service account create request payload

Return Type

- ProjectServiceAccountCreateResponse|error - Project service account created successfully

get organization/projects/[string projectId]/service_accounts/[string serviceAccountId]

function get organization/projects/[string projectId]/service_accounts/[string serviceAccountId](map<string|string[]> headers) returns ProjectServiceAccount|errorRetrieves a service account in the project.

Return Type

- ProjectServiceAccount|error - Project service account retrieved successfully

delete organization/projects/[string projectId]/service_accounts/[string serviceAccountId]

function delete organization/projects/[string projectId]/service_accounts/[string serviceAccountId](map<string|string[]> headers) returns ProjectServiceAccountDeleteResponse|errorDeletes a service account from the project.

Return Type

- ProjectServiceAccountDeleteResponse|error - Project service account deleted successfully

get organization/projects/[string projectId]/users

function get organization/projects/[string projectId]/users(map<string|string[]> headers, *ListProjectUsersQueries queries) returns ProjectUserListResponse|errorReturns a list of users in the project.

Parameters

- queries *ListProjectUsersQueries - Queries to be sent with the request

Return Type

- ProjectUserListResponse|error - Project users listed successfully

post organization/projects/[string projectId]/users

function post organization/projects/[string projectId]/users(ProjectUserCreateRequest payload, map<string|string[]> headers) returns ProjectUser|errorAdds a user to the project. Users must already be members of the organization to be added to a project.

Parameters

- payload ProjectUserCreateRequest - The project user create request payload

Return Type

- ProjectUser|error - User added to project successfully

get organization/projects/[string projectId]/users/[string userId]

function get organization/projects/[string projectId]/users/[string userId](map<string|string[]> headers) returns ProjectUser|errorRetrieves a user in the project.

Return Type

- ProjectUser|error - Project user retrieved successfully

post organization/projects/[string projectId]/users/[string userId]

function post organization/projects/[string projectId]/users/[string userId](ProjectUserUpdateRequest payload, map<string|string[]> headers) returns ProjectUser|errorModifies a user's role in the project.

Parameters

- payload ProjectUserUpdateRequest - The project user update request payload

Return Type

- ProjectUser|error - Project user's role updated successfully

delete organization/projects/[string projectId]/users/[string userId]

function delete organization/projects/[string projectId]/users/[string userId](map<string|string[]> headers) returns ProjectUserDeleteResponse|errorDeletes a user from the project.

Return Type

- ProjectUserDeleteResponse|error - Project user deleted successfully

get organization/usage/audio_speeches

function get organization/usage/audio_speeches(map<string|string[]> headers, *UsageAudioSpeechesQueries queries) returns UsageResponse|errorGet audio speeches usage details for the organization.

Parameters

- queries *UsageAudioSpeechesQueries - Queries to be sent with the request

Return Type

- UsageResponse|error - Usage data retrieved successfully

get organization/usage/audio_transcriptions

function get organization/usage/audio_transcriptions(map<string|string[]> headers, *UsageAudioTranscriptionsQueries queries) returns UsageResponse|errorGet audio transcriptions usage details for the organization.

Parameters

- queries *UsageAudioTranscriptionsQueries - Queries to be sent with the request

Return Type

- UsageResponse|error - Usage data retrieved successfully

get organization/usage/code_interpreter_sessions

function get organization/usage/code_interpreter_sessions(map<string|string[]> headers, *UsageCodeInterpreterSessionsQueries queries) returns UsageResponse|errorGet code interpreter sessions usage details for the organization.

Parameters

- queries *UsageCodeInterpreterSessionsQueries - Queries to be sent with the request

Return Type

- UsageResponse|error - Usage data retrieved successfully

get organization/usage/completions

function get organization/usage/completions(map<string|string[]> headers, *UsageCompletionsQueries queries) returns UsageResponse|errorGet completions usage details for the organization.

Parameters

- queries *UsageCompletionsQueries - Queries to be sent with the request

Return Type

- UsageResponse|error - Usage data retrieved successfully

get organization/usage/embeddings

function get organization/usage/embeddings(map<string|string[]> headers, *UsageEmbeddingsQueries queries) returns UsageResponse|errorGet embeddings usage details for the organization.

Parameters

- queries *UsageEmbeddingsQueries - Queries to be sent with the request

Return Type

- UsageResponse|error - Usage data retrieved successfully

get organization/usage/images

function get organization/usage/images(map<string|string[]> headers, *UsageImagesQueries queries) returns UsageResponse|errorGet images usage details for the organization.

Parameters

- queries *UsageImagesQueries - Queries to be sent with the request

Return Type

- UsageResponse|error - Usage data retrieved successfully

get organization/usage/moderations

function get organization/usage/moderations(map<string|string[]> headers, *UsageModerationsQueries queries) returns UsageResponse|errorGet moderations usage details for the organization.

Parameters

- queries *UsageModerationsQueries - Queries to be sent with the request

Return Type

- UsageResponse|error - Usage data retrieved successfully

get organization/usage/vector_stores

function get organization/usage/vector_stores(map<string|string[]> headers, *UsageVectorStoresQueries queries) returns UsageResponse|errorGet vector stores usage details for the organization.

Parameters

- queries *UsageVectorStoresQueries - Queries to be sent with the request

Return Type

- UsageResponse|error - Usage data retrieved successfully

get organization/users

function get organization/users(map<string|string[]> headers, *ListUsersQueries queries) returns UserListResponse|errorLists all of the users in the organization.

Parameters

- queries *ListUsersQueries - Queries to be sent with the request

Return Type

- UserListResponse|error - Users listed successfully

get organization/users/[string userId]

Retrieves a user by their identifier.

post organization/users/[string userId]

function post organization/users/[string userId](UserRoleUpdateRequest payload, map<string|string[]> headers) returns User|errorModifies a user's role in the organization.

Parameters

- payload UserRoleUpdateRequest - The new user role to modify. This must be one of

ownerormember

delete organization/users/[string userId]

function delete organization/users/[string userId](map<string|string[]> headers) returns UserDeleteResponse|errorDeletes a user from the organization.

Return Type

- UserDeleteResponse|error - User deleted successfully

post realtime/sessions

function post realtime/sessions(RealtimeSessionCreateRequest payload, map<string|string[]> headers) returns RealtimeSessionCreateResponse|errorCreate an ephemeral API token for use in client-side applications with the

Realtime API. Can be configured with the same session parameters as the

session.update client event.

It responds with a session object, plus a client_secret key which contains

a usable ephemeral API token that can be used to authenticate browser clients

for the Realtime API.

Parameters

- payload RealtimeSessionCreateRequest - Create an ephemeral API key with the given session configuration

Return Type

- RealtimeSessionCreateResponse|error - Session created successfully

post realtime/transcription_sessions

function post realtime/transcription_sessions(RealtimeTranscriptionSessionCreateRequest payload, map<string|string[]> headers) returns RealtimeTranscriptionSessionCreateResponse|errorCreate an ephemeral API token for use in client-side applications with the

Realtime API specifically for realtime transcriptions.

Can be configured with the same session parameters as the transcription_session.update client event.

It responds with a session object, plus a client_secret key which contains

a usable ephemeral API token that can be used to authenticate browser clients

for the Realtime API.

Parameters

- payload RealtimeTranscriptionSessionCreateRequest - Create an ephemeral API key with the given session configuration

Return Type

- RealtimeTranscriptionSessionCreateResponse|error - Session created successfully

post responses

function post responses(CreateResponse payload, map<string|string[]> headers) returns Response|errorCreates a model response. Provide text or image inputs to generate text or JSON outputs. Have the model call your own custom code or use built-in tools like web search or file search to use your own data as input for the model's response.

Parameters

- payload CreateResponse -

get responses/[string responseId]

function get responses/[string responseId](map<string|string[]> headers, *GetResponseQueries queries) returns Response|errorRetrieves a model response with the given ID.

Parameters

- queries *GetResponseQueries - Queries to be sent with the request

delete responses/[string responseId]

Deletes a model response with the given ID.

Return Type

- error? - OK

get responses/[string responseId]/input_items

function get responses/[string responseId]/input_items(map<string|string[]> headers, *ListInputItemsQueries queries) returns ResponseItemList|errorReturns a list of input items for a given response.

Parameters

- queries *ListInputItemsQueries - Queries to be sent with the request

Return Type

- ResponseItemList|error - OK

post threads

function post threads(CreateThreadRequest payload, map<string|string[]> headers) returns ThreadObject|errorCreate a thread.

Parameters

- payload CreateThreadRequest -

Return Type

- ThreadObject|error - OK

post threads/runs

function post threads/runs(CreateThreadAndRunRequest payload, map<string|string[]> headers) returns RunObject|errorCreate a thread and run it in one request.

Parameters

- payload CreateThreadAndRunRequest -

get threads/[string threadId]

function get threads/[string threadId](map<string|string[]> headers) returns ThreadObject|errorRetrieves a thread.

Return Type

- ThreadObject|error - OK

post threads/[string threadId]

function post threads/[string threadId](ModifyThreadRequest payload, map<string|string[]> headers) returns ThreadObject|errorModifies a thread.

Parameters

- payload ModifyThreadRequest -

Return Type

- ThreadObject|error - OK

delete threads/[string threadId]

function delete threads/[string threadId](map<string|string[]> headers) returns DeleteThreadResponse|errorDelete a thread.

Return Type

get threads/[string threadId]/messages

function get threads/[string threadId]/messages(map<string|string[]> headers, *ListMessagesQueries queries) returns ListMessagesResponse|errorReturns a list of messages for a given thread.

Parameters

- queries *ListMessagesQueries - Queries to be sent with the request

Return Type

post threads/[string threadId]/messages

function post threads/[string threadId]/messages(CreateMessageRequest payload, map<string|string[]> headers) returns MessageObject|errorCreate a message.

Parameters

- payload CreateMessageRequest -

Return Type

- MessageObject|error - OK

get threads/[string threadId]/messages/[string messageId]

function get threads/[string threadId]/messages/[string messageId](map<string|string[]> headers) returns MessageObject|errorRetrieve a message.

Return Type

- MessageObject|error - OK

post threads/[string threadId]/messages/[string messageId]

function post threads/[string threadId]/messages/[string messageId](ModifyMessageRequest payload, map<string|string[]> headers) returns MessageObject|errorModifies a message.

Parameters

- payload ModifyMessageRequest -

Return Type

- MessageObject|error - OK

delete threads/[string threadId]/messages/[string messageId]

function delete threads/[string threadId]/messages/[string messageId](map<string|string[]> headers) returns DeleteMessageResponse|errorDeletes a message.

Return Type

get threads/[string threadId]/runs

function get threads/[string threadId]/runs(map<string|string[]> headers, *ListRunsQueries queries) returns ListRunsResponse|errorReturns a list of runs belonging to a thread.

Parameters

- queries *ListRunsQueries - Queries to be sent with the request

Return Type

- ListRunsResponse|error - OK

post threads/[string threadId]/runs

function post threads/[string threadId]/runs(CreateRunRequest payload, map<string|string[]> headers, *CreateRunQueries queries) returns RunObject|errorCreate a run.

Parameters

- payload CreateRunRequest -

- queries *CreateRunQueries - Queries to be sent with the request

get threads/[string threadId]/runs/[string runId]

function get threads/[string threadId]/runs/[string runId](map<string|string[]> headers) returns RunObject|errorRetrieves a run.

post threads/[string threadId]/runs/[string runId]

function post threads/[string threadId]/runs/[string runId](ModifyRunRequest payload, map<string|string[]> headers) returns RunObject|errorModifies a run.

Parameters

- payload ModifyRunRequest -

post threads/[string threadId]/runs/[string runId]/cancel

function post threads/[string threadId]/runs/[string runId]/cancel(map<string|string[]> headers) returns RunObject|errorCancels a run that is in_progress.

get threads/[string threadId]/runs/[string runId]/steps

function get threads/[string threadId]/runs/[string runId]/steps(map<string|string[]> headers, *ListRunStepsQueries queries) returns ListRunStepsResponse|errorReturns a list of run steps belonging to a run.

Parameters

- queries *ListRunStepsQueries - Queries to be sent with the request

Return Type

get threads/[string threadId]/runs/[string runId]/steps/[string stepId]

function get threads/[string threadId]/runs/[string runId]/steps/[string stepId](map<string|string[]> headers, *GetRunStepQueries queries) returns RunStepObject|errorRetrieves a run step.

Parameters

- queries *GetRunStepQueries - Queries to be sent with the request

Return Type

- RunStepObject|error - OK

post threads/[string threadId]/runs/[string runId]/submit_tool_outputs

function post threads/[string threadId]/runs/[string runId]/submit_tool_outputs(SubmitToolOutputsRunRequest payload, map<string|string[]> headers) returns RunObject|errorWhen a run has the status: "requires_action" and required_action.type is submit_tool_outputs, this endpoint can be used to submit the outputs from the tool calls once they're all completed. All outputs must be submitted in a single request.

Parameters

- payload SubmitToolOutputsRunRequest -

post uploads

function post uploads(CreateUploadRequest payload, map<string|string[]> headers) returns Upload|errorCreates an intermediate Upload object that you can add Parts to. Currently, an Upload can accept at most 8 GB in total and expires after an hour after you create it.

Once you complete the Upload, we will create a File object that contains all the parts you uploaded. This File is usable in the rest of our platform as a regular File object.

For certain purpose values, the correct mime_type must be specified.

Please refer to documentation for the

supported MIME types for your use case.

For guidance on the proper filename extensions for each purpose, please follow the documentation on creating a File.

Parameters

- payload CreateUploadRequest -

post uploads/[string uploadId]/cancel

Cancels the Upload. No Parts may be added after an Upload is cancelled.

post uploads/[string uploadId]/complete

function post uploads/[string uploadId]/complete(CompleteUploadRequest payload, map<string|string[]> headers) returns Upload|errorCompletes the Upload.

Within the returned Upload object, there is a nested File object that is ready to use in the rest of the platform.

You can specify the order of the Parts by passing in an ordered list of the Part IDs.

The number of bytes uploaded upon completion must match the number of bytes initially specified when creating the Upload object. No Parts may be added after an Upload is completed.

Parameters

- payload CompleteUploadRequest -

post uploads/[string uploadId]/parts

function post uploads/[string uploadId]/parts(AddUploadPartRequest payload, map<string|string[]> headers) returns UploadPart|errorAdds a Part to an Upload object. A Part represents a chunk of bytes from the file you are trying to upload.

Each Part can be at most 64 MB, and you can add Parts until you hit the Upload maximum of 8 GB.

It is possible to add multiple Parts in parallel. You can decide the intended order of the Parts when you complete the Upload.

Parameters

- payload AddUploadPartRequest -

Return Type

- UploadPart|error - OK

get vector_stores

function get vector_stores(map<string|string[]> headers, *ListVectorStoresQueries queries) returns ListVectorStoresResponse|errorReturns a list of vector stores.

Parameters

- queries *ListVectorStoresQueries - Queries to be sent with the request

Return Type

post vector_stores

function post vector_stores(CreateVectorStoreRequest payload, map<string|string[]> headers) returns VectorStoreObject|errorCreate a vector store.

Parameters

- payload CreateVectorStoreRequest -

Return Type

- VectorStoreObject|error - OK

get vector_stores/[string vectorStoreId]

function get vector_stores/[string vectorStoreId](map<string|string[]> headers) returns VectorStoreObject|errorRetrieves a vector store.

Return Type

- VectorStoreObject|error - OK

post vector_stores/[string vectorStoreId]

function post vector_stores/[string vectorStoreId](UpdateVectorStoreRequest payload, map<string|string[]> headers) returns VectorStoreObject|errorModifies a vector store.

Parameters

- payload UpdateVectorStoreRequest -

Return Type

- VectorStoreObject|error - OK

delete vector_stores/[string vectorStoreId]

function delete vector_stores/[string vectorStoreId](map<string|string[]> headers) returns DeleteVectorStoreResponse|errorDelete a vector store.

Return Type

post vector_stores/[string vectorStoreId]/file_batches

function post vector_stores/[string vectorStoreId]/file_batches(CreateVectorStoreFileBatchRequest payload, map<string|string[]> headers) returns VectorStoreFileBatchObject|errorCreate a vector store file batch.

Parameters

- payload CreateVectorStoreFileBatchRequest -

Return Type

get vector_stores/[string vectorStoreId]/file_batches/[string batchId]

function get vector_stores/[string vectorStoreId]/file_batches/[string batchId](map<string|string[]> headers) returns VectorStoreFileBatchObject|errorRetrieves a vector store file batch.

Return Type

post vector_stores/[string vectorStoreId]/file_batches/[string batchId]/cancel

function post vector_stores/[string vectorStoreId]/file_batches/[string batchId]/cancel(map<string|string[]> headers) returns VectorStoreFileBatchObject|errorCancel a vector store file batch. This attempts to cancel the processing of files in this batch as soon as possible.

Return Type

get vector_stores/[string vectorStoreId]/file_batches/[string batchId]/files

function get vector_stores/[string vectorStoreId]/file_batches/[string batchId]/files(map<string|string[]> headers, *ListFilesInVectorStoreBatchQueries queries) returns ListVectorStoreFilesResponse|errorReturns a list of vector store files in a batch.

Parameters

- queries *ListFilesInVectorStoreBatchQueries - Queries to be sent with the request

Return Type

get vector_stores/[string vectorStoreId]/files

function get vector_stores/[string vectorStoreId]/files(map<string|string[]> headers, *ListVectorStoreFilesQueries queries) returns ListVectorStoreFilesResponse|errorReturns a list of vector store files.

Parameters

- queries *ListVectorStoreFilesQueries - Queries to be sent with the request

Return Type

post vector_stores/[string vectorStoreId]/files

function post vector_stores/[string vectorStoreId]/files(CreateVectorStoreFileRequest payload, map<string|string[]> headers) returns VectorStoreFileObject|errorCreate a vector store file by attaching a File to a vector store.

Parameters

- payload CreateVectorStoreFileRequest -

Return Type

get vector_stores/[string vectorStoreId]/files/[string fileId]

function get vector_stores/[string vectorStoreId]/files/[string fileId](map<string|string[]> headers) returns VectorStoreFileObject|errorRetrieves a vector store file.

Return Type

post vector_stores/[string vectorStoreId]/files/[string fileId]

function post vector_stores/[string vectorStoreId]/files/[string fileId](UpdateVectorStoreFileAttributesRequest payload, map<string|string[]> headers) returns VectorStoreFileObject|errorUpdate attributes on a vector store file.

Parameters

- payload UpdateVectorStoreFileAttributesRequest -

Return Type

delete vector_stores/[string vectorStoreId]/files/[string fileId]

function delete vector_stores/[string vectorStoreId]/files/[string fileId](map<string|string[]> headers) returns DeleteVectorStoreFileResponse|errorDelete a vector store file. This will remove the file from the vector store but the file itself will not be deleted. To delete the file, use the delete file endpoint.

Return Type

get vector_stores/[string vectorStoreId]/files/[string fileId]/content

function get vector_stores/[string vectorStoreId]/files/[string fileId]/content(map<string|string[]> headers) returns VectorStoreFileContentResponse|errorRetrieve the parsed contents of a vector store file.

Return Type

post vector_stores/[string vectorStoreId]/search

function post vector_stores/[string vectorStoreId]/search(VectorStoreSearchRequest payload, map<string|string[]> headers) returns VectorStoreSearchResultsPage|errorSearch a vector store for relevant chunks based on a query and file attributes filter.

Parameters

- payload VectorStoreSearchRequest -

Return Type

Records

openai: AddUploadPartRequest

Fields

- data record { fileContent byte[], fileName string } - The chunk of bytes for this Part

openai: AdminApiKey

Represents an individual Admin API key in an org

Fields

- owner AdminApiKeyOwner -

- lastUsedAt int? - The Unix timestamp (in seconds) of when the API key was last used

- name string - The name of the API key

- createdAt int - The Unix timestamp (in seconds) of when the API key was created

- id string - The identifier, which can be referenced in API endpoints

- redactedValue string - The redacted value of the API key

- value? string - The value of the API key. Only shown on create

- 'object string - The object type, which is always

organization.admin_api_key

openai: AdminApiKeyOwner

Fields

- role? string - Always

owner

- name? string - The name of the user

- createdAt? int - The Unix timestamp (in seconds) of when the user was created

- id? string - The identifier, which can be referenced in API endpoints

- 'type? string - Always

user

- 'object? string - The object type, which is always organization.user

openai: AdminApiKeysListQueries

Represents the Queries record for the operation: admin-api-keys-list

Fields

- 'limit int(default 20) - Maximum number of keys to return.

- after? string? - Return keys with IDs that come after this ID in the pagination order.

- 'order "asc"|"desc" (default "asc") - Order results by creation time, ascending or descending.

openai: ApiKeyList

Fields

- firstId? string -

- data? AdminApiKey[] -

- lastId? string -

- hasMore? boolean -

- 'object? string -

openai: ApproximateLocation

Fields

- country? anydata -

- city? anydata -

- timezone? anydata -

- 'type "approximate" (default "approximate") - The type of location approximation. Always

approximate

- region? anydata -

openai: AssistantObject

Represents an assistant that can call the model and use tools

Fields

- instructions string? - The system instructions that the assistant uses. The maximum length is 256,000 characters

- toolResources? AssistantObjectToolResources? -

- metadata Metadata? - Set of 16 key-value pairs that can be attached to an object. This can be useful for storing additional information about the object in a structured format, and querying for objects via API or the dashboard. Keys are strings with a maximum length of 64 characters. Values are strings with a maximum length of 512 characters

- createdAt int - The Unix timestamp (in seconds) for when the assistant was created

- description string? - The description of the assistant. The maximum length is 512 characters

- tools AssistantObjectTools[](default []) - A list of tool enabled on the assistant. There can be a maximum of 128 tools per assistant. Tools can be of types

code_interpreter,file_search, orfunction

- topP decimal?(default 1) - An alternative to sampling with temperature, called nucleus sampling, where the model considers the results of the tokens with top_p probability mass. So 0.1 means only the tokens comprising the top 10% probability mass are considered. We generally recommend altering this or temperature but not both

- responseFormat? AssistantsApiResponseFormatOption -

- name string? - The name of the assistant. The maximum length is 256 characters

- temperature decimal?(default 1) - What sampling temperature to use, between 0 and 2. Higher values like 0.8 will make the output more random, while lower values like 0.2 will make it more focused and deterministic

- model string - ID of the model to use. You can use the List models API to see all of your available models, or see our Model overview for descriptions of them

- id string - The identifier, which can be referenced in API endpoints

- 'object "assistant" - The object type, which is always

assistant

openai: AssistantObjectToolResources

A set of resources that are used by the assistant's tools. The resources are specific to the type of tool. For example, the code_interpreter tool requires a list of file IDs, while the file_search tool requires a list of vector store IDs

Fields

- codeInterpreter? AssistantObjectToolResourcesCodeInterpreter -

- fileSearch? AssistantObjectToolResourcesFileSearch -

openai: AssistantObjectToolResourcesCodeInterpreter

Fields

openai: AssistantObjectToolResourcesFileSearch

Fields

- vectorStoreIds? string[] - The ID of the vector store attached to this assistant. There can be a maximum of 1 vector store attached to the assistant

openai: AssistantsNamedToolChoice

Specifies a tool the model should use. Use to force the model to call a specific tool

Fields

- 'function? AssistantsNamedToolChoiceFunction -

- 'type "function"|"code_interpreter"|"file_search" - The type of the tool. If type is

function, the function name must be set

openai: AssistantsNamedToolChoiceFunction

Fields

- name string - The name of the function to call

openai: AssistantToolsCode

Fields

- 'type "code_interpreter" - The type of tool being defined:

code_interpreter

openai: AssistantToolsFileSearch

Fields

- fileSearch? AssistantToolsFileSearchFileSearch -

- 'type "file_search" - The type of tool being defined:

file_search

openai: AssistantToolsFileSearchFileSearch

Overrides for the file search tool

Fields

- maxNumResults? int - The maximum number of results the file search tool should output. The default is 20 for

gpt-4*models and 5 forgpt-3.5-turbo. This number should be between 1 and 50 inclusive. Note that the file search tool may output fewer thanmax_num_resultsresults. See the file search tool documentation for more information

- rankingOptions? FileSearchRankingOptions -

openai: AssistantToolsFileSearchTypeOnly

Fields

- 'type "file_search" - The type of tool being defined:

file_search

openai: AssistantToolsFunction

Fields

- 'function FunctionObject -

- 'type "function" - The type of tool being defined:

function

openai: AuditLog

A log of a user action or configuration change within this organization

Fields

- rateLimitUpdated? AuditLogRateLimitupdated -

- userUpdated? AuditLogUserupdated -

- project? AuditLogProject - The project that the action was scoped to. Absent for actions not scoped to projects

- certificateDeleted? AuditLogCertificatedeleted -

- serviceAccountDeleted? AuditLogServiceAccountdeleted -

- 'type AuditLogEventType - The event type

- logoutFailed? AuditLogLoginfailed -

- certificateUpdated? AuditLogCertificatecreated -

- loginFailed? AuditLogLoginfailed -

- serviceAccountUpdated? AuditLogServiceAccountupdated -

- rateLimitDeleted? AuditLogRateLimitdeleted -

- id string - The ID of this log

- projectCreated? AuditLogProjectcreated -

- certificateCreated? AuditLogCertificatecreated -

- checkpointPermissionCreated? AuditLogCheckpointPermissioncreated -

- organizationUpdated? AuditLogOrganizationupdated -

- projectUpdated? AuditLogProjectupdated -

- projectArchived? AuditLogProjectarchived -

- userAdded? AuditLogUseradded -

- inviteAccepted? AuditLogInviteaccepted -

- inviteDeleted? AuditLogInviteaccepted -

- actor AuditLogActor - The actor who performed the audit logged action

- effectiveAt int - The Unix timestamp (in seconds) of the event

- checkpointPermissionDeleted? AuditLogCheckpointPermissiondeleted -

- inviteSent? AuditLogInvitesent -

- certificatesDeactivated? AuditLogCertificatesactivated -

- serviceAccountCreated? AuditLogServiceAccountcreated -

- apiKeyCreated? AuditLogApiKeycreated -

- userDeleted? AuditLogUserdeleted -

- apiKeyDeleted? AuditLogApiKeydeleted -

- certificatesActivated? AuditLogCertificatesactivated -

- apiKeyUpdated? AuditLogApiKeyupdated -

openai: AuditLogActor

The actor who performed the audit logged action

Fields

- apiKey? AuditLogActorApiKey -

- session? AuditLogActorSession - The session in which the audit logged action was performed

- 'type? "session"|"api_key" - The type of actor. Is either

sessionorapi_key

openai: AuditLogActorApiKey

The API Key used to perform the audit logged action

Fields

- serviceAccount? AuditLogActorServiceAccount -

- id? string - The tracking id of the API key

- 'type? "user"|"service_account" - The type of API key. Can be either

userorservice_account

- user? AuditLogActorUser - The user who performed the audit logged action

openai: AuditLogActorServiceAccount

The service account that performed the audit logged action

Fields

- id? string - The service account id

openai: AuditLogActorSession

The session in which the audit logged action was performed

Fields

- ipAddress? string - The IP address from which the action was performed

- user? AuditLogActorUser - The user who performed the audit logged action

openai: AuditLogActorUser

The user who performed the audit logged action

Fields

- id? string - The user id

- email? string - The user email

openai: AuditLogApiKeycreated

The details for events with this type

Fields

- data? AuditLogApiKeycreatedData - The payload used to create the API key

- id? string - The tracking ID of the API key

openai: AuditLogApiKeycreatedData

The payload used to create the API key

Fields

- scopes? string[] - A list of scopes allowed for the API key, e.g.

["api.model.request"]

openai: AuditLogApiKeydeleted

The details for events with this type

Fields

- id? string - The tracking ID of the API key

openai: AuditLogApiKeyupdated

The details for events with this type

Fields

- changesRequested? AuditLogApiKeyupdatedChangesRequested -

- id? string - The tracking ID of the API key

openai: AuditLogApiKeyupdatedChangesRequested

The payload used to update the API key

Fields

- scopes? string[] - A list of scopes allowed for the API key, e.g.

["api.model.request"]

openai: AuditLogCertificatecreated

The details for events with this type

Fields

- name? string - The name of the certificate

- id? string - The certificate ID

openai: AuditLogCertificatedeleted

The details for events with this type

Fields

- name? string - The name of the certificate

- certificate? string - The certificate content in PEM format

- id? string - The certificate ID

openai: AuditLogCertificatesactivated

The details for events with this type

Fields

- certificates? AuditLogCertificatesactivatedCertificates[] -

openai: AuditLogCertificatesactivatedCertificates

Fields

- name? string - The name of the certificate

- id? string - The certificate ID

openai: AuditLogCheckpointPermissioncreated

The project and fine-tuned model checkpoint that the checkpoint permission was created for

Fields

- data? AuditLogCheckpointPermissioncreatedData - The payload used to create the checkpoint permission

- id? string - The ID of the checkpoint permission

openai: AuditLogCheckpointPermissioncreatedData

The payload used to create the checkpoint permission

Fields

- projectId? string - The ID of the project that the checkpoint permission was created for

- fineTunedModelCheckpoint? string - The ID of the fine-tuned model checkpoint

openai: AuditLogCheckpointPermissiondeleted

The details for events with this type

Fields

- id? string - The ID of the checkpoint permission

openai: AuditLogInviteaccepted

The details for events with this type

Fields

- id? string - The ID of the invite

openai: AuditLogInvitesent

The details for events with this type

Fields

- data? AuditLogInvitesentData - The payload used to create the invite

- id? string - The ID of the invite

openai: AuditLogInvitesentData

The payload used to create the invite

Fields

- role? string - The role the email was invited to be. Is either

ownerormember

- email? string - The email invited to the organization

openai: AuditLogLoginfailed

The details for events with this type

Fields

- errorMessage? string - The error message of the failure

- errorCode? string - The error code of the failure

openai: AuditLogOrganizationupdated

The details for events with this type

Fields

- changesRequested? AuditLogOrganizationupdatedChangesRequested -

- id? string - The organization ID

openai: AuditLogOrganizationupdatedChangesRequested

The payload used to update the organization settings

Fields

- name? string - The organization name

- description? string - The organization description

- title? string - The organization title

openai: AuditLogOrganizationupdatedChangesRequestedSettings

Fields

- threadsUiVisibility? string - Visibility of the threads page which shows messages created with the Assistants API and Playground. One of

ANY_ROLE,OWNERS, orNONE

- usageDashboardVisibility? string - Visibility of the usage dashboard which shows activity and costs for your organization. One of

ANY_ROLEorOWNERS

openai: AuditLogProject

The project that the action was scoped to. Absent for actions not scoped to projects

Fields

- name? string - The project title

- id? string - The project ID

openai: AuditLogProjectarchived

The details for events with this type

Fields

- id? string - The project ID

openai: AuditLogProjectcreated

The details for events with this type

Fields

- data? AuditLogProjectcreatedData - The payload used to create the project

- id? string - The project ID

openai: AuditLogProjectcreatedData

The payload used to create the project

Fields

- name? string - The project name

- title? string - The title of the project as seen on the dashboard

openai: AuditLogProjectupdated

The details for events with this type

Fields

- changesRequested? AuditLogProjectupdatedChangesRequested -

- id? string - The project ID

openai: AuditLogProjectupdatedChangesRequested

The payload used to update the project

Fields

- title? string - The title of the project as seen on the dashboard

openai: AuditLogRateLimitdeleted

The details for events with this type

Fields

- id? string - The rate limit ID

openai: AuditLogRateLimitupdated

The details for events with this type

Fields

- changesRequested? AuditLogRateLimitupdatedChangesRequested -

- id? string - The rate limit ID

openai: AuditLogRateLimitupdatedChangesRequested

The payload used to update the rate limits

Fields

- batch1DayMaxInputTokens? int - The maximum batch input tokens per day. Only relevant for certain models

- maxTokensPer1Minute? int - The maximum tokens per minute

- maxImagesPer1Minute? int - The maximum images per minute. Only relevant for certain models

- maxAudioMegabytesPer1Minute? int - The maximum audio megabytes per minute. Only relevant for certain models

- maxRequestsPer1Minute? int - The maximum requests per minute

- maxRequestsPer1Day? int - The maximum requests per day. Only relevant for certain models

openai: AuditLogServiceAccountcreated

The details for events with this type

Fields

- data? AuditLogServiceAccountcreatedData - The payload used to create the service account

- id? string - The service account ID

openai: AuditLogServiceAccountcreatedData

The payload used to create the service account

Fields

- role? string - The role of the service account. Is either

ownerormember

openai: AuditLogServiceAccountdeleted

The details for events with this type

Fields

- id? string - The service account ID

openai: AuditLogServiceAccountupdated

The details for events with this type

Fields

- changesRequested? AuditLogServiceAccountupdatedChangesRequested -

- id? string - The service account ID

openai: AuditLogServiceAccountupdatedChangesRequested

The payload used to updated the service account

Fields

- role? string - The role of the service account. Is either

ownerormember

openai: AuditLogUseradded

The details for events with this type

Fields

- data? AuditLogUseraddedData - The payload used to add the user to the project

- id? string - The user ID

openai: AuditLogUseraddedData

The payload used to add the user to the project

Fields

- role? string - The role of the user. Is either

ownerormember

openai: AuditLogUserdeleted

The details for events with this type

Fields

- id? string - The user ID

openai: AuditLogUserupdated

The details for events with this type

Fields

- changesRequested? AuditLogUserupdatedChangesRequested -

- id? string - The project ID

openai: AuditLogUserupdatedChangesRequested

The payload used to update the user

Fields

- role? string - The role of the user. Is either

ownerormember

openai: AutoChunkingStrategyRequestParam

The default strategy. This strategy currently uses a max_chunk_size_tokens of 800 and chunk_overlap_tokens of 400

Fields

- 'type "auto" - Always

auto

openai: Batch

Fields

- cancelledAt? int - The Unix timestamp (in seconds) for when the batch was cancelled

- metadata? Metadata? - Set of 16 key-value pairs that can be attached to an object. This can be useful for storing additional information about the object in a structured format, and querying for objects via API or the dashboard. Keys are strings with a maximum length of 64 characters. Values are strings with a maximum length of 512 characters

- requestCounts? BatchRequestCounts -

- inputFileId string - The ID of the input file for the batch

- outputFileId? string - The ID of the file containing the outputs of successfully executed requests

- errorFileId? string - The ID of the file containing the outputs of requests with errors

- createdAt int - The Unix timestamp (in seconds) for when the batch was created

- inProgressAt? int - The Unix timestamp (in seconds) for when the batch started processing

- expiredAt? int - The Unix timestamp (in seconds) for when the batch expired

- finalizingAt? int - The Unix timestamp (in seconds) for when the batch started finalizing

- completedAt? int - The Unix timestamp (in seconds) for when the batch was completed

- endpoint string - The OpenAI API endpoint used by the batch

- expiresAt? int - The Unix timestamp (in seconds) for when the batch will expire

- cancellingAt? int - The Unix timestamp (in seconds) for when the batch started cancelling

- completionWindow string - The time frame within which the batch should be processed

- id string -

- failedAt? int - The Unix timestamp (in seconds) for when the batch failed

- errors? BatchErrors -

- 'object "batch" - The object type, which is always

batch

- status "validating"|"failed"|"in_progress"|"finalizing"|"completed"|"expired"|"cancelling"|"cancelled" - The current status of the batch

openai: BatchErrors

Fields

- data? BatchErrorsData[] -

- 'object? string - The object type, which is always

list

openai: BatchErrorsData

Fields

- code? string - An error code identifying the error type

- param? string? - The name of the parameter that caused the error, if applicable

- line? int? - The line number of the input file where the error occurred, if applicable

- message? string - A human-readable message providing more details about the error

openai: BatchesBody

Fields

- endpoint "/v1/responses"|"/v1/chat/completions"|"/v1/embeddings"|"/v1/completions" - The endpoint to be used for all requests in the batch. Currently

/v1/responses,/v1/chat/completions,/v1/embeddings, and/v1/completionsare supported. Note that/v1/embeddingsbatches are also restricted to a maximum of 50,000 embedding inputs across all requests in the batch