openai.finetunes

Module openai.finetunes


ballerinax/openai.finetunes Ballerina library

Overview

OpenAI, an AI research organization focused on creating friendly AI for humanity, offers the OpenAI API to access its powerful AI models for tasks like natural language processing and image generation.

The ballerinax/openai.finetunes package offers APIs to connect to and interact with the fine-tuning-related endpoints of the OpenAI REST API v1, allowing users to customize OpenAI's models to meet specific needs.

Setup guide

To use the OpenAI connector, you must have access to the OpenAI API through an OpenAI Platform account and a project under it. If you do not have an OpenAI Platform account, you can sign up for one on the OpenAI Platform.

Create an OpenAI API key

-

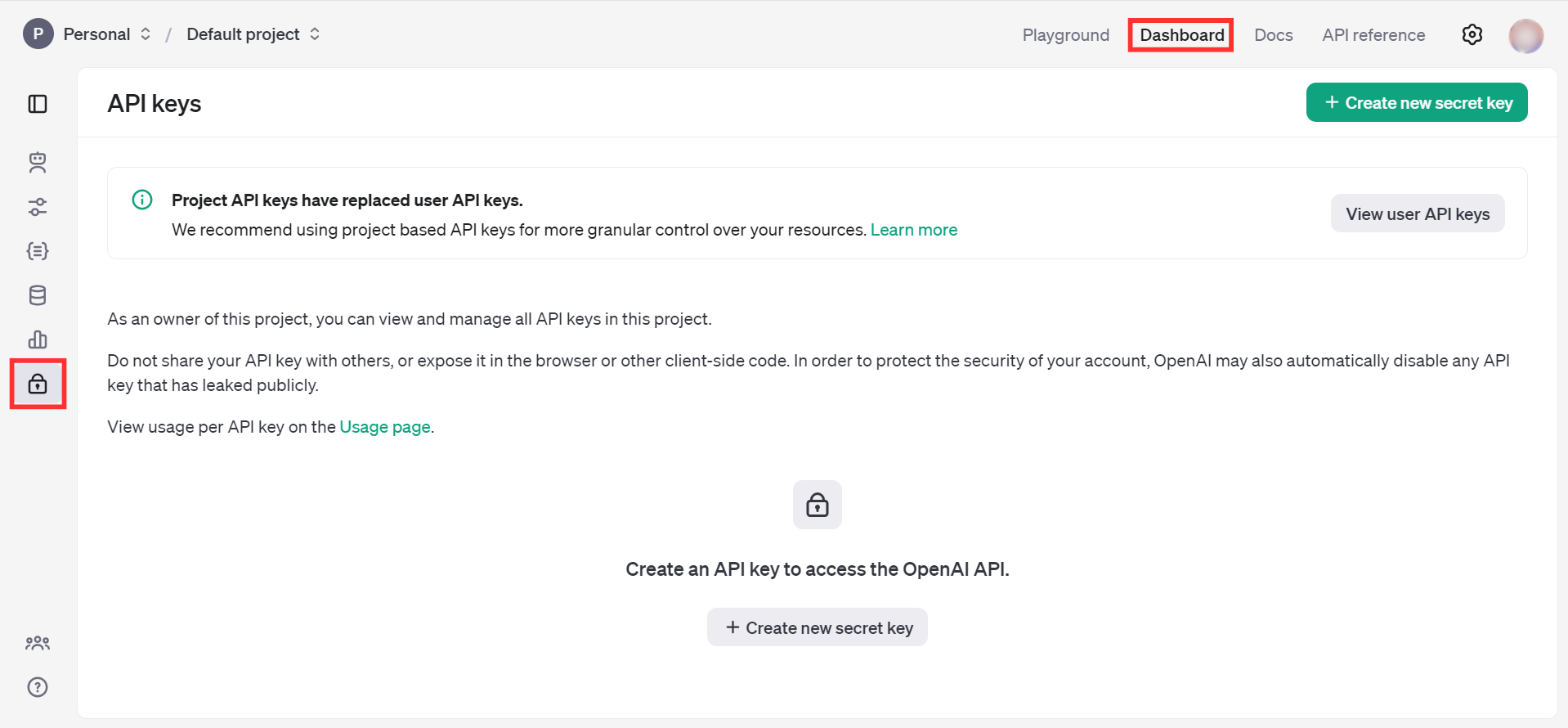

Open the OpenAI Platform Dashboard.

-

Navigate to Dashboard -> API keys

-

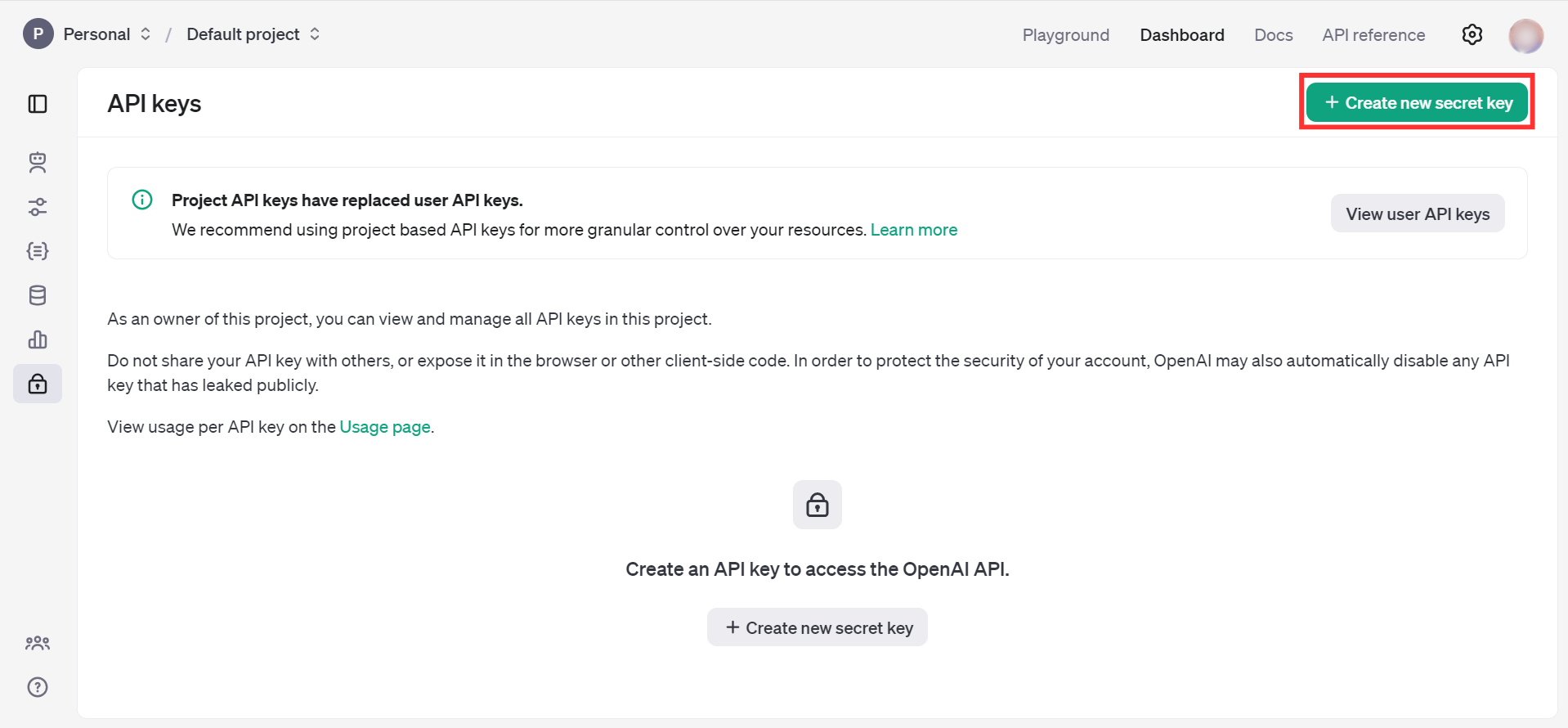

Click on the "Create new secret key" button

-

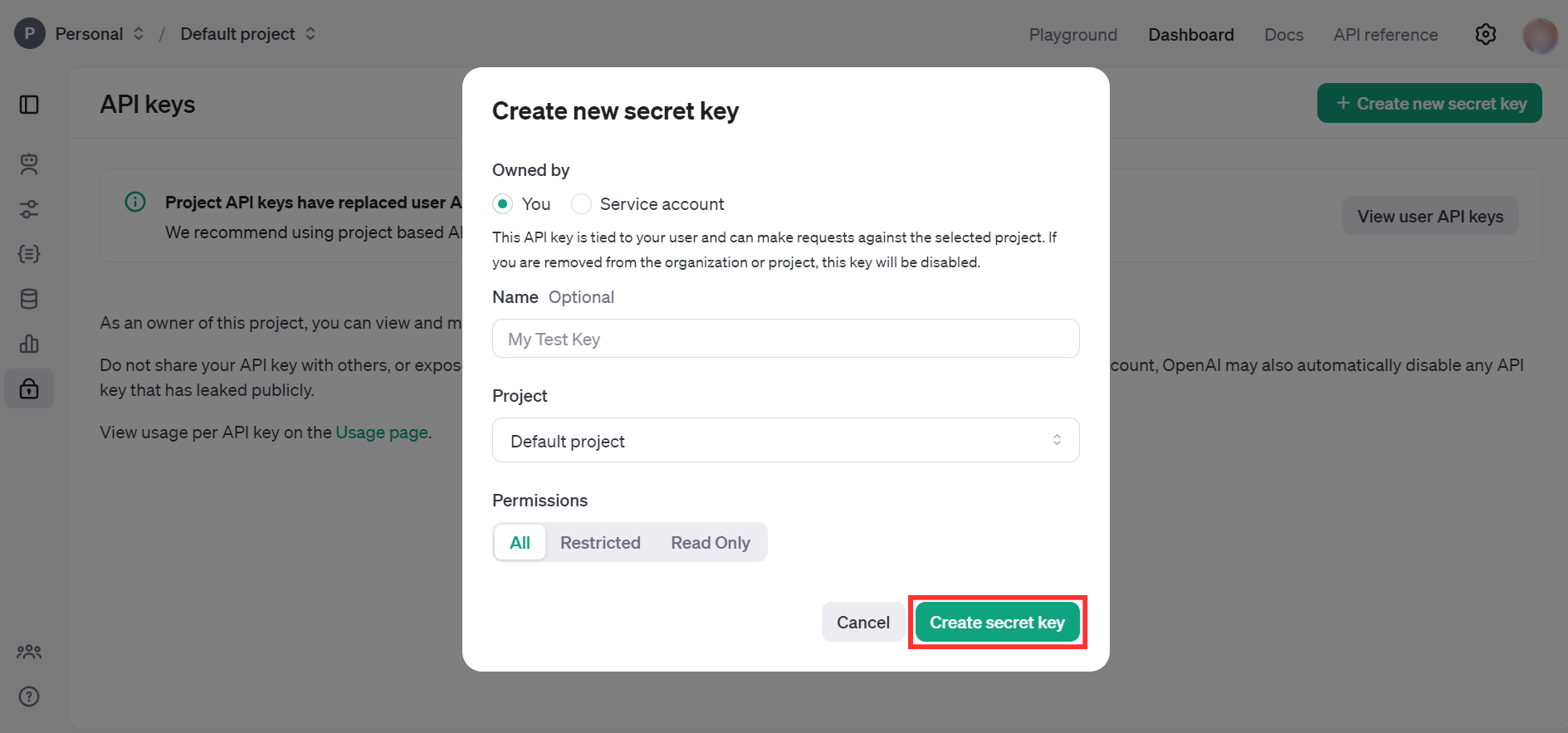

Fill the details and click on Create secret key

-

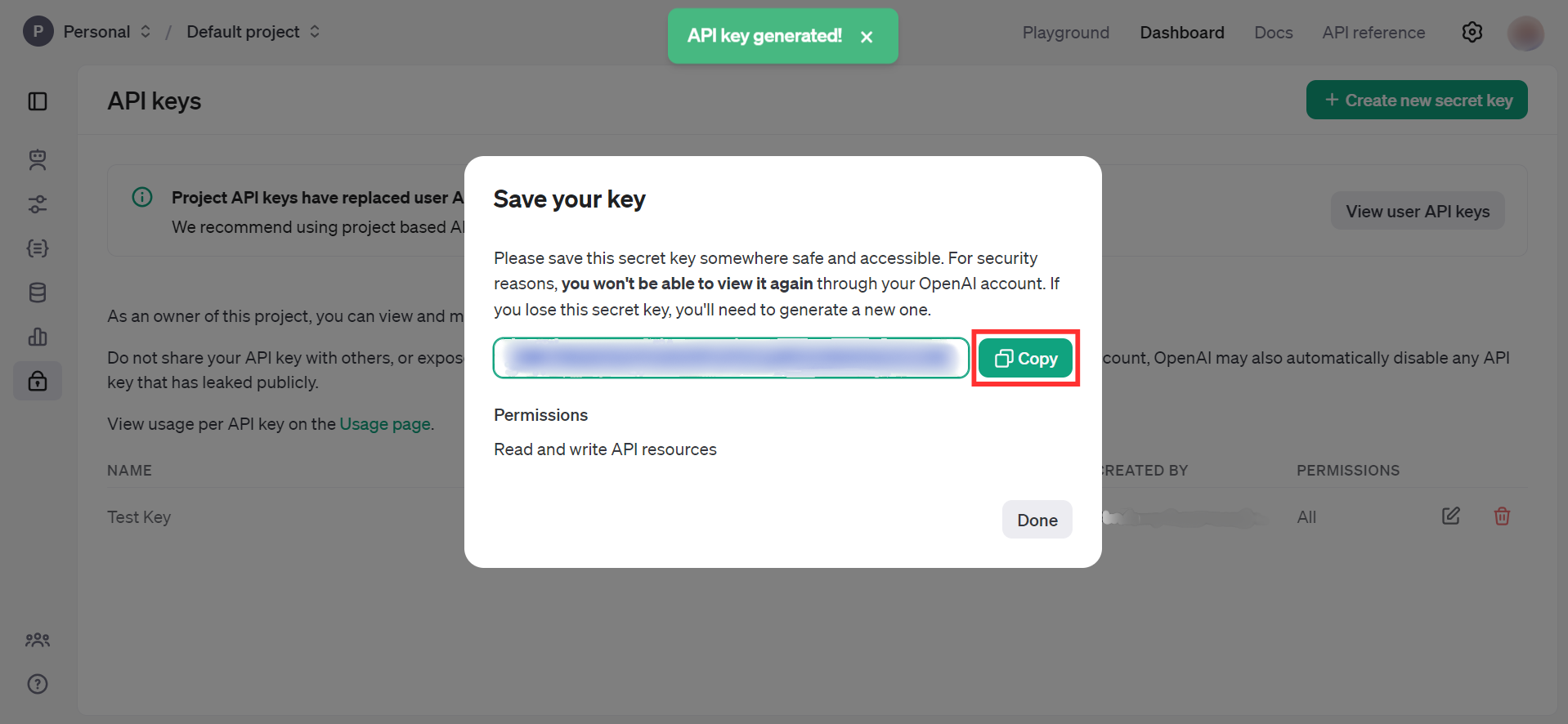

Store the API key securely to use in your application

Quickstart

To use the OpenAI Finetunes connector in your Ballerina application, update the .bal file as follows:

Step 1: Import the module

Import the openai.finetunes module.

```ballerina
import ballerina/io;
import ballerinax/openai.finetunes;
```

Step 2: Instantiate a new connector

Create a finetunes:ConnectionConfig with the obtained API key and initialize the connector.

```ballerina
// Supply the API key at run time, for example in Config.toml: token = "<API_KEY>"
configurable string token = ?;

final finetunes:Client openAIFinetunes = check new ({auth: {token}});
```

Step 3: Invoke the connector operation

Now, utilize the available connector operations.

Note: First, create a sample.jsonl file in the directory from which you run the application. This file should contain the training data formatted according to OpenAI's fine-tuning guidelines.

Fine-tuning the gpt-3.5-turbo model

```ballerina
public function main() returns error? {
    // Upload the training data with the "fine-tune" purpose.
    finetunes:CreateFileRequest req = {
        file: {fileContent: check io:fileReadBytes("sample.jsonl"), fileName: "sample.jsonl"},
        purpose: "fine-tune"
    };
    finetunes:OpenAIFile fileRes = check openAIFinetunes->/files.post(req);
    string fileId = fileRes.id;

    // Start a fine-tuning job on gpt-3.5-turbo using the uploaded file.
    finetunes:CreateFineTuningJobRequest fineTuneRequest = {
        model: "gpt-3.5-turbo",
        training_file: fileId
    };
    finetunes:FineTuningJob fineTuneResponse = check openAIFinetunes->/fine_tuning/jobs.post(fineTuneRequest);
    io:println("Fine-tuning job ", fineTuneResponse.id, " created with status ", fineTuneResponse.status);
}
```

Step 4: Run the Ballerina application

bal run

Examples

The OpenAI Finetunes connector provides practical examples illustrating usage in various scenarios. Explore these examples, covering the following use cases:

- Sarcastic bot - Fine-tune the GPT-3.5-turbo model to generate sarcastic responses.

- Sports headline analyzer - Fine-tune the GPT-4o-mini model to extract structured information (player, team, sport, and gender) from sports headlines.

Clients

openai.finetunes: Client

The OpenAI REST API. Please see https://platform.openai.com/docs/api-reference for more details.

Constructor

Gets invoked to initialize the connector.

init (ConnectionConfig config, string serviceUrl)

- config ConnectionConfig - The configurations to be used when initializing the connector

- serviceUrl string(default "https://api.openai.com/v1") - URL of the target service
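The constructor accepts any of the ConnectionConfig fields listed under Records. A minimal sketch, assuming you want a longer timeout and automatic retries: the helper name buildConfig and the values (120 seconds, 3 retries, 2-second initial interval) are illustrative choices rather than recommendations from the connector, and serviceUrl is left at its default.

```ballerina
import ballerina/io;
import ballerinax/openai.finetunes;

configurable string token = ?;

// Hypothetical helper: builds a client configuration with a longer timeout
// and retries. The numbers are illustrative assumptions.
function buildConfig(string apiKey) returns finetunes:ConnectionConfig {
    return {
        auth: {token: apiKey},
        timeout: 120, // seconds to wait for a response
        retryConfig: {
            count: 3, // retry a failed request up to three times
            interval: 2, // wait 2 seconds before the first retry
            backOffFactor: 2.0 // double the wait after each retry
        }
    };
}

public function main() returns error? {
    // serviceUrl is omitted, so the default https://api.openai.com/v1 is used.
    finetunes:Client openAIFinetunes = check new (buildConfig(token));
    finetunes:ListModelsResponse models = check openAIFinetunes->/models.get();
    io:println("Models visible to this key: ", models.data.length());
}
```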

delete files/[string file_id]

function delete files/[string file_id](map<string|string[]> headers) returns DeleteFileResponse|error

Delete a file.

Return Type

- DeleteFileResponse|error - OK

delete models/[string model]

function delete models/[string model](map<string|string[]> headers) returns DeleteModelResponse|error

Delete a fine-tuned model. You must have the Owner role in your organization to delete a model.

Return Type

- DeleteModelResponse|error - OK
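The two delete operations can be combined into a cleanup step once a model is no longer needed. A minimal sketch: modelId and fileId are hypothetical configurable values you would supply in Config.toml, and the helper isFineTunedModelId is an assumption of this example that only attempts IDs starting with "ft:", the prefix shown in the suffix example under CreateFineTuningJobRequest.

```ballerina
import ballerina/io;
import ballerinax/openai.finetunes;

configurable string token = ?;
// Hypothetical IDs: replace with IDs from your own job and upload.
configurable string modelId = ?;
configurable string fileId = ?;

final finetunes:Client openAIFinetunes = check new ({auth: {token}});

// Hypothetical guard: only try to delete model IDs that look fine-tuned.
function isFineTunedModelId(string id) returns boolean {
    return id.startsWith("ft:");
}

public function main() returns error? {
    if isFineTunedModelId(modelId) {
        finetunes:DeleteModelResponse modelRes = check openAIFinetunes->/models/[modelId].delete();
        io:println("Model deleted: ", modelRes.deleted);
    }
    // Remove the training file that is no longer needed.
    finetunes:DeleteFileResponse fileRes = check openAIFinetunes->/files/[fileId].delete();
    io:println("File deleted: ", fileRes.deleted);
}
```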

get files

function get files(map<string|string[]> headers, *ListFilesQueries queries) returns ListFilesResponse|error

Returns a list of files that belong to the user's organization.

Parameters

- queries *ListFilesQueries - Queries to be sent with the request

Return Type

- ListFilesResponse|error - OK
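The fields of ListFilesQueries can be passed as named arguments. A minimal sketch, assuming you want to check whether sample.jsonl was already uploaded for fine-tuning before uploading it again; the helper findFileIdByName is hypothetical and simply returns the first match by file name.

```ballerina
import ballerina/io;
import ballerinax/openai.finetunes;

configurable string token = ?;

final finetunes:Client openAIFinetunes = check new ({auth: {token}});

// Hypothetical helper: returns the ID of the first file with the given name, or nil.
function findFileIdByName(finetunes:OpenAIFile[] files, string name) returns string? {
    foreach finetunes:OpenAIFile f in files {
        if f.filename == name {
            return f.id;
        }
    }
    return ();
}

public function main() returns error? {
    // Only list files uploaded with the "fine-tune" purpose.
    finetunes:ListFilesResponse res = check openAIFinetunes->/files.get(purpose = "fine-tune");
    string? fileId = findFileIdByName(res.data, "sample.jsonl");
    if fileId is string {
        io:println("Found: ", fileId);
    } else {
        io:println("sample.jsonl has not been uploaded yet");
    }
}
```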

get files/[string file_id]

function get files/[string file_id](map<string|string[]> headers) returns OpenAIFile|error

Returns information about a specific file.

Return Type

- OpenAIFile|error - OK

get files/[string file_id]/content

Returns the contents of the specified file.

Return Type

- byte[]|error - OK
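Because the content comes back as raw bytes, it can be written straight to disk. A minimal sketch, assuming fileId is a hypothetical configurable ID of a JSONL file you uploaded earlier and that downloaded.jsonl is an acceptable output path; the helper countJsonlRecords is an illustrative addition that counts non-empty lines as a quick sanity check.

```ballerina
import ballerina/io;
import ballerinax/openai.finetunes;

configurable string token = ?;
// Hypothetical file ID from your own upload.
configurable string fileId = ?;

final finetunes:Client openAIFinetunes = check new ({auth: {token}});

// Hypothetical helper: counts non-empty lines (JSONL records) in raw bytes.
function countJsonlRecords(byte[] content) returns int {
    int count = 0;
    boolean lineHasContent = false;
    foreach byte b in content {
        if b == 10 { // '\n' ends a line
            if lineHasContent {
                count += 1;
            }
            lineHasContent = false;
        } else if b != 13 && b != 32 { // ignore '\r' and spaces
            lineHasContent = true;
        }
    }
    // The last line may not end with a newline.
    if lineHasContent {
        count += 1;
    }
    return count;
}

public function main() returns error? {
    byte[] content = check openAIFinetunes->/files/[fileId]/content.get();
    check io:fileWriteBytes("downloaded.jsonl", content);
    io:println("Records in file: ", countJsonlRecords(content));
}
```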

get fine_tuning/jobs

function get fine_tuning/jobs(map<string|string[]> headers, *ListPaginatedFineTuningJobsQueries queries) returns ListPaginatedFineTuningJobsResponse|error

List your organization's fine-tuning jobs.

Parameters

- queries *ListPaginatedFineTuningJobsQueries - Queries to be sent with the request

Return Type

- ListPaginatedFineTuningJobsResponse|error - OK
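The limit and after query fields support cursor-style pagination. A minimal sketch, assuming the next page is requested with after set to the last job ID of the current page until has_more is false; the helper nextCursor and the page size of 20 are illustrative choices.

```ballerina
import ballerina/io;
import ballerinax/openai.finetunes;

configurable string token = ?;

final finetunes:Client openAIFinetunes = check new ({auth: {token}});

// Hypothetical helper: the cursor for the next page, or nil when done.
function nextCursor(string[] ids, boolean hasMore) returns string? {
    if !hasMore || ids.length() == 0 {
        return ();
    }
    return ids[ids.length() - 1];
}

public function main() returns error? {
    string? after = ();
    while true {
        finetunes:ListPaginatedFineTuningJobsResponse page;
        if after is string {
            page = check openAIFinetunes->/fine_tuning/jobs.get('limit = 20, after = after);
        } else {
            page = check openAIFinetunes->/fine_tuning/jobs.get('limit = 20);
        }
        foreach finetunes:FineTuningJob job in page.data {
            io:println(job.id, " ", job.status);
        }
        string[] ids = from finetunes:FineTuningJob j in page.data select j.id;
        after = nextCursor(ids, page.has_more);
        if after is () {
            break;
        }
    }
}
```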

get fine_tuning/jobs/[string fine_tuning_job_id]

function get fine_tuning/jobs/[string fine_tuning_job_id](map<string|string[]> headers) returns FineTuningJob|error

Get info about a fine-tuning job.

Return Type

- FineTuningJob|error - OK
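Since fine-tuning runs asynchronously, a common pattern is to poll this operation until the job reaches a final state. A minimal sketch, assuming succeeded, failed, and cancelled (from the status values listed under FineTuningJob) are the terminal states; jobId is a hypothetical configurable value and the 30-second interval is an arbitrary choice.

```ballerina
import ballerina/io;
import ballerina/lang.runtime;
import ballerinax/openai.finetunes;

configurable string token = ?;
// Hypothetical job ID, as returned by post fine_tuning/jobs.
configurable string jobId = ?;

final finetunes:Client openAIFinetunes = check new ({auth: {token}});

// Assumed terminal states: the job status does not change after these.
function isTerminal(string status) returns boolean {
    return status == "succeeded" || status == "failed" || status == "cancelled";
}

public function main() returns error? {
    finetunes:FineTuningJob job = check openAIFinetunes->/fine_tuning/jobs/[jobId].get();
    while !isTerminal(job.status) {
        io:println("Status: ", job.status);
        runtime:sleep(30); // poll interval in seconds
        job = check openAIFinetunes->/fine_tuning/jobs/[jobId].get();
    }
    io:println("Final status: ", job.status, ", model: ", job.fine_tuned_model);
}
```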

get fine_tuning/jobs/[string fine_tuning_job_id]/checkpoints

function get fine_tuning/jobs/[string fine_tuning_job_id]/checkpoints(map<string|string[]> headers, *ListFineTuningJobCheckpointsQueries queries) returns ListFineTuningJobCheckpointsResponse|error

List checkpoints for a fine-tuning job.

Parameters

- queries *ListFineTuningJobCheckpointsQueries - Queries to be sent with the request

Return Type

- ListFineTuningJobCheckpointsResponse|error - OK
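Each checkpoint carries its own model name, so you can pick one to evaluate. A minimal sketch, assuming "latest" means the highest step_number; jobId is a hypothetical configurable value and latestCheckpoint is an illustrative helper, not part of the connector.

```ballerina
import ballerina/io;
import ballerinax/openai.finetunes;

configurable string token = ?;
// Hypothetical job ID.
configurable string jobId = ?;

final finetunes:Client openAIFinetunes = check new ({auth: {token}});

// Hypothetical helper: the checkpoint with the highest step number, or nil.
function latestCheckpoint(finetunes:FineTuningJobCheckpoint[] checkpoints)
        returns finetunes:FineTuningJobCheckpoint? {
    finetunes:FineTuningJobCheckpoint? latest = ();
    foreach finetunes:FineTuningJobCheckpoint cp in checkpoints {
        if latest is finetunes:FineTuningJobCheckpoint && latest.step_number >= cp.step_number {
            continue;
        }
        latest = cp;
    }
    return latest;
}

public function main() returns error? {
    finetunes:ListFineTuningJobCheckpointsResponse res =
        check openAIFinetunes->/fine_tuning/jobs/[jobId]/checkpoints.get('limit = 10);
    finetunes:FineTuningJobCheckpoint? latest = latestCheckpoint(res.data);
    if latest is finetunes:FineTuningJobCheckpoint {
        io:println("Latest checkpoint model: ", latest.fine_tuned_model_checkpoint);
    }
}
```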

get fine_tuning/jobs/[string fine_tuning_job_id]/events

function get fine_tuning/jobs/[string fine_tuning_job_id]/events(map<string|string[]> headers, *ListFineTuningEventsQueries queries) returns ListFineTuningJobEventsResponse|error

Get status updates for a fine-tuning job.

Parameters

- queries *ListFineTuningEventsQueries - Queries to be sent with the request

Return Type

- ListFineTuningJobEventsResponse|error - OK
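Events are useful for diagnosing a failed job. A minimal sketch, assuming you want to print every event and then surface only those at the "error" level; jobId is a hypothetical configurable value, errorMessages is an illustrative helper, and the limit of 50 is arbitrary.

```ballerina
import ballerina/io;
import ballerinax/openai.finetunes;

configurable string token = ?;
// Hypothetical job ID.
configurable string jobId = ?;

final finetunes:Client openAIFinetunes = check new ({auth: {token}});

// Hypothetical helper: messages of events reported at the "error" level.
function errorMessages(finetunes:FineTuningJobEvent[] events) returns string[] {
    return from finetunes:FineTuningJobEvent e in events
        where e.level == "error"
        select e.message;
}

public function main() returns error? {
    finetunes:ListFineTuningJobEventsResponse res =
        check openAIFinetunes->/fine_tuning/jobs/[jobId]/events.get('limit = 50);
    foreach finetunes:FineTuningJobEvent e in res.data {
        io:println(e.created_at, " [", e.level, "] ", e.message);
    }
    string[] errors = errorMessages(res.data);
    if errors.length() > 0 {
        io:println("Error events: ", errors);
    }
}
```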

get models

function get models(map<string|string[]> headers) returns ListModelsResponse|error

Lists the currently available models, and provides basic information about each one such as the owner and availability.

Return Type

- ListModelsResponse|error - OK
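The created timestamp makes it easy to see the most recently added models, which typically include your newest fine-tuned ones. A minimal sketch; the helper newestFirst is illustrative and simply sorts by created in descending order.

```ballerina
import ballerina/io;
import ballerinax/openai.finetunes;

configurable string token = ?;

final finetunes:Client openAIFinetunes = check new ({auth: {token}});

// Hypothetical helper: models sorted by creation time, newest first.
function newestFirst(finetunes:Model[] models) returns finetunes:Model[] {
    return from finetunes:Model m in models
        order by m.created descending
        select m;
}

public function main() returns error? {
    finetunes:ListModelsResponse res = check openAIFinetunes->/models.get();
    foreach finetunes:Model m in newestFirst(res.data) {
        io:println(m.id, " (owned by ", m.owned_by, ")");
    }
}
```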

get models/[string model]

Retrieves a model instance, providing basic information about the model such as the owner and permissioning.

post files

function post files(CreateFileRequest payload, map<string|string[]> headers) returns OpenAIFile|error

Upload a file that can be used across various endpoints. Individual files can be up to 512 MB, and the size of all files uploaded by one organization can be up to 100 GB.

The Assistants API supports files up to 2 million tokens and of specific file types. See the Assistants Tools guide for details.

The Fine-tuning API only supports .jsonl files. The input also has certain required formats for fine-tuning chat or completions models.

The Batch API only supports .jsonl files up to 100 MB in size. The input also has a specific required format.

Contact OpenAI if you need to increase these storage limits.

Parameters

- payload CreateFileRequest -

Return Type

- OpenAIFile|error - OK
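Because the Fine-tuning API only supports .jsonl files, a cheap client-side check can catch mistakes before an upload. A minimal sketch, assuming a local file named training.jsonl (a hypothetical path); the helper isJsonlFile only inspects the extension and does not validate the file's contents.

```ballerina
import ballerina/io;
import ballerinax/openai.finetunes;

configurable string token = ?;

final finetunes:Client openAIFinetunes = check new ({auth: {token}});

// Hypothetical helper: checks the extension only, not the JSONL format.
function isJsonlFile(string fileName) returns boolean {
    return fileName.toLowerAscii().endsWith(".jsonl");
}

public function main() returns error? {
    string path = "training.jsonl"; // hypothetical local file
    if !isJsonlFile(path) {
        return error("Fine-tuning files must be .jsonl: " + path);
    }
    finetunes:CreateFileRequest req = {
        file: {fileContent: check io:fileReadBytes(path), fileName: path},
        purpose: "fine-tune"
    };
    finetunes:OpenAIFile uploaded = check openAIFinetunes->/files.post(req);
    io:println("Uploaded ", uploaded.filename, " as ", uploaded.id, " (", uploaded.bytes, " bytes)");
}
```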

post fine_tuning/jobs

function post fine_tuning/jobs(CreateFineTuningJobRequest payload, map<string|string[]> headers) returns FineTuningJob|error

Creates a fine-tuning job, which begins the process of creating a new model from a given dataset.

The response includes details of the enqueued job, including the job status and, once the job completes, the name of the fine-tuned model.

Parameters

- payload CreateFineTuningJobRequest -

Return Type

- FineTuningJob|error - OK
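Beyond model and training_file, the request accepts the optional fields described under CreateFineTuningJobRequest. A minimal sketch, assuming two hypothetical configurable file IDs uploaded with the "fine-tune" purpose; the suffix "support-bot" and n_epochs of 3 are illustrative values, and the remaining hyperparameters keep their "auto" defaults.

```ballerina
import ballerina/io;
import ballerinax/openai.finetunes;

configurable string token = ?;
// Hypothetical IDs of files uploaded with purpose "fine-tune".
configurable string trainingFileId = ?;
configurable string validationFileId = ?;

final finetunes:Client openAIFinetunes = check new ({auth: {token}});

// Hypothetical helper: a job request with a validation file, fixed epochs,
// and a model-name suffix (at most 18 characters, as documented).
function buildJobRequest(string trainingId, string validationId)
        returns finetunes:CreateFineTuningJobRequest {
    return {
        model: "gpt-3.5-turbo",
        training_file: trainingId,
        validation_file: validationId,
        suffix: "support-bot",
        hyperparameters: {n_epochs: 3} // batch_size and learning_rate_multiplier stay "auto"
    };
}

public function main() returns error? {
    finetunes:FineTuningJob job = check openAIFinetunes->/fine_tuning/jobs.post(
        buildJobRequest(trainingFileId, validationFileId));
    io:println("Created ", job.id, " with status ", job.status);
}
```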

post fine_tuning/jobs/[string fine_tuning_job_id]/cancel

function post fine_tuning/jobs/[string fine_tuning_job_id]/cancel(map<string|string[]> headers) returns FineTuningJob|error

Immediately cancel a fine-tune job.

Return Type

- FineTuningJob|error - OK
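A cancel request only makes sense for a job that is still in progress. A minimal sketch, assuming that validating_files, queued, and running are the only cancellable states; jobId is a hypothetical configurable value and isCancellable is an illustrative helper.

```ballerina
import ballerina/io;
import ballerinax/openai.finetunes;

configurable string token = ?;
// Hypothetical job ID.
configurable string jobId = ?;

final finetunes:Client openAIFinetunes = check new ({auth: {token}});

// Assumption: only non-terminal jobs can be cancelled.
function isCancellable(string status) returns boolean {
    return status == "validating_files" || status == "queued" || status == "running";
}

public function main() returns error? {
    finetunes:FineTuningJob job = check openAIFinetunes->/fine_tuning/jobs/[jobId].get();
    if !isCancellable(job.status) {
        io:println("Job already finished with status ", job.status);
        return;
    }
    finetunes:FineTuningJob cancelled = check openAIFinetunes->/fine_tuning/jobs/[jobId]/cancel.post();
    io:println("Status after cancel request: ", cancelled.status);
}
```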

Records

openai.finetunes: ClientHttp1Settings

Provides settings related to HTTP/1.x protocol.

Fields

- keepAlive KeepAlive(default http:KEEPALIVE_AUTO) - Specifies whether to reuse a connection for multiple requests

- chunking Chunking(default http:CHUNKING_AUTO) - The chunking behaviour of the request

- proxy ProxyConfig? - Proxy server related options

openai.finetunes: ConnectionConfig

Provides a set of configurations for controlling the behaviours when communicating with a remote HTTP endpoint.

Fields

- auth BearerTokenConfig - Configurations related to client authentication

- httpVersion HttpVersion(default http:HTTP_2_0) - The HTTP version understood by the client

- http1Settings ClientHttp1Settings? - Configurations related to HTTP/1.x protocol

- http2Settings ClientHttp2Settings? - Configurations related to HTTP/2 protocol

- timeout decimal(default 60) - The maximum time to wait (in seconds) for a response before closing the connection

- forwarded string(default "disable") - The choice of setting the forwarded/x-forwarded header

- poolConfig PoolConfiguration? - Configurations associated with request pooling

- cache CacheConfig? - HTTP caching related configurations

- compression Compression(default http:COMPRESSION_AUTO) - Specifies the way of handling the compression (accept-encoding) header

- circuitBreaker CircuitBreakerConfig? - Configurations associated with the behaviour of the Circuit Breaker

- retryConfig RetryConfig? - Configurations associated with retrying

- responseLimits ResponseLimitConfigs? - Configurations associated with inbound response size limits

- secureSocket ClientSecureSocket? - SSL/TLS-related options

- proxy ProxyConfig? - Proxy server related options

- validation boolean(default true) - Enables the inbound payload validation functionality provided by the constraint package. Enabled by default

openai.finetunes: CreateFileRequest

Fields

- file record { fileContent byte[], fileName string } - The File object (not file name) to be uploaded.

- purpose "assistants"|"batch"|"fine-tune"|"vision" - The intended purpose of the uploaded file. Use "assistants" for Assistants and Message files, "vision" for Assistants image file inputs, "batch" for Batch API, and "fine-tune" for Fine-tuning.

openai.finetunes: CreateFineTuningJobRequest

Fields

- model string|"babbage-002"|"davinci-002"|"gpt-3.5-turbo" - The name of the model to fine-tune. You can select one of the supported models.

- training_file string - The ID of an uploaded file that contains training data.

See upload file for how to upload a file.

Your dataset must be formatted as a JSONL file. Additionally, you must upload your file with the purpose fine-tune. The contents of the file should differ depending on whether the model uses the chat or completions format. See the fine-tuning guide for more details.

- hyperparameters CreateFineTuningJobRequest_hyperparameters? -

- suffix string? - A string of up to 18 characters that will be added to your fine-tuned model name.

For example, a suffix of "custom-model-name" would produce a model name like ft:gpt-3.5-turbo:openai:custom-model-name:7p4lURel.

- validation_file string? - The ID of an uploaded file that contains validation data.

If you provide this file, the data is used to generate validation metrics periodically during fine-tuning. These metrics can be viewed in the fine-tuning results file.

The same data should not be present in both train and validation files.

Your dataset must be formatted as a JSONL file. You must upload your file with the purpose fine-tune. See the fine-tuning guide for more details.

- integrations CreateFineTuningJobRequest_integrations[]? - A list of integrations to enable for your fine-tuning job.

- seed int? - The seed controls the reproducibility of the job. Passing in the same seed and job parameters should produce the same results, but may differ in rare cases. If a seed is not specified, one will be generated for you.

openai.finetunes: CreateFineTuningJobRequest_hyperparameters

The hyperparameters used for the fine-tuning job.

Fields

- batch_size "auto"|int(default "auto") - Number of examples in each batch. A larger batch size means that model parameters are updated less frequently, but with lower variance.

- learning_rate_multiplier "auto"|decimal(default "auto") - Scaling factor for the learning rate. A smaller learning rate may be useful to avoid overfitting.

- n_epochs "auto"|int(default "auto") - The number of epochs to train the model for. An epoch refers to one full cycle through the training dataset.

openai.finetunes: CreateFineTuningJobRequest_integrations

Fields

- 'type "wandb" - The type of integration to enable. Currently, only "wandb" (Weights and Biases) is supported.

- wandb CreateFineTuningJobRequest_wandb -

openai.finetunes: CreateFineTuningJobRequest_wandb

The settings for your integration with Weights and Biases. This payload specifies the project that metrics will be sent to. Optionally, you can set an explicit display name for your run, add tags to your run, and set a default entity (team, username, etc.) to be associated with your run.

Fields

- project string - The name of the project that the new run will be created under.

- name string? - A display name to set for the run. If not set, the job ID is used as the name.

- entity string? - The entity to use for the run. This allows you to set the team or username of the WandB user that you would like associated with the run. If not set, the default entity for the registered WandB API key is used.

- tags string[]? - A list of tags to be attached to the newly created run. These tags are passed through directly to WandB. Some default tags are generated by OpenAI: "openai/finetune", "openai/{base-model}", "openai/{ftjob-abcdef}".
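The integration records nest inside CreateFineTuningJobRequest. A minimal sketch, assuming a WandB API key is already registered for your OpenAI organization (as the entity description above implies); trainingFileId, the project name "my-finetune-project", and the tag "experiment-1" are hypothetical, and buildWandbJob is an illustrative helper.

```ballerina
import ballerina/io;
import ballerinax/openai.finetunes;

configurable string token = ?;
// Hypothetical ID of a file uploaded with purpose "fine-tune".
configurable string trainingFileId = ?;

final finetunes:Client openAIFinetunes = check new ({auth: {token}});

// Hypothetical helper: a job request that reports metrics to a WandB project.
function buildWandbJob(string trainingId, string wandbProject)
        returns finetunes:CreateFineTuningJobRequest {
    return {
        model: "gpt-3.5-turbo",
        training_file: trainingId,
        integrations: [
            {
                'type: "wandb",
                wandb: {
                    project: wandbProject,
                    tags: ["experiment-1"] // sent alongside OpenAI's default tags
                }
            }
        ]
    };
}

public function main() returns error? {
    finetunes:FineTuningJob job = check openAIFinetunes->/fine_tuning/jobs.post(
        buildWandbJob(trainingFileId, "my-finetune-project"));
    io:println("Created ", job.id, "; metrics will be reported to Weights and Biases");
}
```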

openai.finetunes: DeleteFileResponse

Fields

- id string -

- 'object "file" -

- deleted boolean -

openai.finetunes: DeleteModelResponse

Fields

- id string -

- deleted boolean -

- 'object string -

openai.finetunes: FineTuningIntegration

Fields

- 'type "wandb" - The type of the integration being enabled for the fine-tuning job

- wandb CreateFineTuningJobRequest_wandb -

openai.finetunes: FineTuningJob

The fine_tuning.job object represents a fine-tuning job that has been created through the API.

Fields

- id string - The object identifier, which can be referenced in the API endpoints.

- created_at int - The Unix timestamp (in seconds) for when the fine-tuning job was created.

- 'error FineTuningJob_error -

- fine_tuned_model string - The name of the fine-tuned model that is being created. The value will be null if the fine-tuning job is still running.

- finished_at int - The Unix timestamp (in seconds) for when the fine-tuning job was finished. The value will be null if the fine-tuning job is still running.

- hyperparameters FineTuningJob_hyperparameters -

- model string - The base model that is being fine-tuned.

- 'object "fine_tuning.job" - The object type, which is always "fine_tuning.job".

- organization_id string - The organization that owns the fine-tuning job.

- status "validating_files"|"queued"|"running"|"succeeded"|"failed"|"cancelled" - The current status of the fine-tuning job, which can be one of validating_files, queued, running, succeeded, failed, or cancelled.

- trained_tokens int - The total number of billable tokens processed by this fine-tuning job. The value will be null if the fine-tuning job is still running.

- integrations (FineTuningIntegration)[]? - A list of integrations to enable for this fine-tuning job.

- seed int - The seed used for the fine-tuning job.

- estimated_finish int? - The Unix timestamp (in seconds) for when the fine-tuning job is estimated to finish. The value will be null if the fine-tuning job is not running.

openai.finetunes: FineTuningJob_error

For fine-tuning jobs that have failed, this will contain more information on the cause of the failure.

Fields

- code string? - A machine-readable error code.

- message string? - A human-readable error message.

- param string? - The parameter that was invalid, usually training_file or validation_file. This field will be null if the failure was not parameter-specific.

openai.finetunes: FineTuningJob_hyperparameters

The hyperparameters used for the fine-tuning job. See the fine-tuning guide for more details.

Fields

- n_epochs "auto"|int - The number of epochs to train the model for. An epoch refers to one full cycle through the training dataset. "auto" decides the optimal number of epochs based on the size of the dataset. If you set the number manually, any value from 1 to 50 epochs is supported.

openai.finetunes: FineTuningJobCheckpoint

The fine_tuning.job.checkpoint object represents a model checkpoint for a fine-tuning job that is ready to use.

Fields

- id string - The checkpoint identifier, which can be referenced in the API endpoints.

- created_at int - The Unix timestamp (in seconds) for when the checkpoint was created.

- fine_tuned_model_checkpoint string - The name of the fine-tuned checkpoint model that is created.

- step_number int - The step number that the checkpoint was created at.

- metrics FineTuningJobCheckpoint_metrics -

- fine_tuning_job_id string - The name of the fine-tuning job that this checkpoint was created from.

- 'object "fine_tuning.job.checkpoint" - The object type, which is always "fine_tuning.job.checkpoint".

openai.finetunes: FineTuningJobCheckpoint_metrics

Metrics at the step number during the fine-tuning job.

Fields

- step decimal? -

- train_loss decimal? -

- train_mean_token_accuracy decimal? -

- valid_loss decimal? -

- valid_mean_token_accuracy decimal? -

- full_valid_loss decimal? -

- full_valid_mean_token_accuracy decimal? -

openai.finetunes: FineTuningJobEvent

Fine-tuning job event object

Fields

- id string -

- created_at int -

- level "info"|"warn"|"error" -

- message string -

- 'object "fine_tuning.job.event" -

openai.finetunes: ListFilesQueries

Represents the Queries record for the operation: listFiles

Fields

- purpose string? - Only return files with the given purpose.

openai.finetunes: ListFilesResponse

Fields

- data OpenAIFile[] -

- 'object "list" -

openai.finetunes: ListFineTuningEventsQueries

Represents the Queries record for the operation: listFineTuningEvents

Fields

- 'limit int(default 20) - Number of events to retrieve.

- after string? - Identifier for the last event from the previous pagination request.

openai.finetunes: ListFineTuningJobCheckpointsQueries

Represents the Queries record for the operation: listFineTuningJobCheckpoints

Fields

- 'limit int(default 10) - Number of checkpoints to retrieve.

- after string? - Identifier for the last checkpoint ID from the previous pagination request.

openai.finetunes: ListFineTuningJobCheckpointsResponse

Fields

- data FineTuningJobCheckpoint[] -

- 'object "list" -

- first_id string? -

- last_id string? -

- has_more boolean -

openai.finetunes: ListFineTuningJobEventsResponse

Fields

- data FineTuningJobEvent[] -

- 'object "list" -

openai.finetunes: ListModelsResponse

Fields

- 'object "list" -

- data Model[] -

openai.finetunes: ListPaginatedFineTuningJobsQueries

Represents the Queries record for the operation: listPaginatedFineTuningJobs

Fields

- 'limit int(default 20) - Number of fine-tuning jobs to retrieve.

- after string? - Identifier for the last job from the previous pagination request.

openai.finetunes: ListPaginatedFineTuningJobsResponse

Fields

- data FineTuningJob[] -

- has_more boolean -

- 'object "list" -

openai.finetunes: Model

Describes an OpenAI model offering that can be used with the API.

Fields

- id string - The model identifier, which can be referenced in the API endpoints.

- created int - The Unix timestamp (in seconds) when the model was created.

- 'object "model" - The object type, which is always "model".

- owned_by string - The organization that owns the model.

openai.finetunes: OpenAIFile

The File object represents a document that has been uploaded to OpenAI.

Fields

- id string - The file identifier, which can be referenced in the API endpoints.

- bytes int - The size of the file, in bytes.

- created_at int - The Unix timestamp (in seconds) for when the file was created.

- filename string - The name of the file.

- 'object "file" - The object type, which is always file.

- purpose "assistants"|"assistants_output"|"batch"|"batch_output"|"fine-tune"|"fine-tune-results"|"vision" - The intended purpose of the file. Supported values are assistants, assistants_output, batch, batch_output, fine-tune, fine-tune-results, and vision.

- status "uploaded"|"processed"|"error" - Deprecated. The current status of the file, which can be one of uploaded, processed, or error.

- status_details string? - Deprecated. For details on why a fine-tuning training file failed validation, see the error field on fine_tuning.job.

openai.finetunes: ProxyConfig

Proxy server configurations to be used with the HTTP client endpoint.

Fields

- host string(default "") - Host name of the proxy server

- port int(default 0) - Proxy server port

- userName string(default "") - Proxy server username

- password string(default "") - Proxy server password

Import

import ballerinax/openai.finetunes;

Metadata

Release date: over 1 year ago

Version: 2.0.0

License: Apache-2.0

Compatibility

Platform: any

Ballerina version: 2201.9.3

GraalVM compatible: Yes


Keywords

AI/Fine-tunes

OpenAI

Cost/Paid

Files

Models

Vendor/OpenAI
